Long time readers will know that I bloody love a Gaussian process (GP). I wrote an extremely detailed post on the various ways to define Gaussian processes. And I did not do that because I just love inflicting Hilbert spaces on people. In fact, the only reason that I ever went beyond the standard operational definition of GPs that most people live their whole lives using is that I needed to.

Twice.

The first time was when I needed to understand approximation properties of a certain class of GPs. I wrote a post about it. It’s intense1.

The second time that I really needed to dive into their arcana and apocrypha2 was when I foolishly asked the question can we compute Penalised Complexity (PC) priors3 4 for Gaussian processes?.

The answer was yes. But it’s a bit tricky.

So today I’m going to walk you through the ideas. There’s no real need to read the GP post before reading the first half of this one5, but it would be immensely useful to have at least glanced at the post on PC priors.

This post is very long, but that’s mostly because it tries to be reasonably self-contained. In particular, if you only care about the fat stuff, you really only need to read the first part. After that there’s a long introduction to the theory of stationary Gaussian processes. All of this stuff is standard, but it’s hard to find collected in one place all of the things that I need to derive the PC prior. The third part actually derives the PC prior using a great deal of methods from the previous part.

Part 1: How do you put a prior on parameters of a Gaussian process?

We are in the situation where we have a model that looks something like this6 7 \[\begin{align*} y_i \mid \beta, u, \theta &\sim p(y_i \mid \beta, u, \phi) \\ u(\cdot) \mid \theta &\sim GP(0, c_\theta) \\ \beta, \phi &\sim p(\beta,\phi), \end{align*}\] where \(c_\theta(\cdot,\cdot)\) is a covariance function with parameters \(\theta\) and we need to specify a joint prior on the GP parameters \(\theta\).

The simplest case of this would be GP regression, but a key thing here is that, in general, the structure (or functional form) of the priors on \(\theta\) probably shouldn’t be too tightly tied to the specific likelihood. Why do I say that? Well the scaling of a GP should depend on information about the likelihood, but it’s less clear that anything else in the prior needs to know about the likelihood.

Now this view is predicated on us wanting to make an informative prior. In some very special cases, people with too much time on their hands have derived reference priors for specific models involving GPs. These priors care deeply about which likelihood you use. In fact, if you use them with a different model8, you may not end up with a proper9 posterior. We will talk about those later.

To start, let’s look at the simplest way to build a PC prior. We will then talk about why this is not a good idea.

A first crack at a PC prior

As always, the best place to start is the simplest possible option. There’s always a hope10 that we won’t need to pull out the big guns.

So what is the simplest solution? Well it’s to treat a GP as just a specific multivariate Gaussian distribution \[ u \sim GP(0, \sigma^2R(\theta)), \] where \(R(\theta)\) is a correlation matrix.

The nice thing about a multivariate Gaussian is that we have a clean expression for its Kullback-Leibler divergence. Wikipedia tells us that for an \(n\)-dimensional multivariate Gaussian \[ 2\operatorname{KL}(N(0, \Sigma) || N(0, \Sigma_0)) = \operatorname{tr}\left(\Sigma_0^{-1}\Sigma\right) + \log \det \Sigma_0 - \log \det \Sigma- n. \] To build a PC prior we need to consider a base model. That’s tricky in generality, but as we’ve assumed that the covariance matrix can be decomposed into the variance \(\sigma^2\) and a correlation matrix \(R(\theta)\), we can at least specify an easy base model for \(\sigma\). As always, the simplest model is one with no GP in it, which corresponds to \(\sigma_\text{base} = 0\). From here, we can follow the usual steps to specify the PC prior \[ p(\sigma) = \lambda e^{-\lambda \sigma}, \] where we choose \(\lambda = \log(\alpha)/U\) for some upper bound \(U>0\) and some tail probability \(0<\alpha<1\) so that \[ \Pr(\theta > U) = \alpha. \] The specific choice of \(U\) will depend on the context. For instance, if it’s logistic regression we probably want something like11 \(U=1\). If we have a GP on the log-mean of a Poisson distribution, then we probably want \(U < 21.5\) if you want the mean of the Poisson distribution to be less than the maximum integer12 in R. In most data, you’re gonna want13 \(U\ll 5\). If the GP is on the mean of a normal distribution, the choice of \(U\) will depend on the context and scaling of the data.

Without more assumptions about the form of the covariance function, it is impossible to choose a base model for the other parameters \(\theta\).

That said, there is one special case that’s important: the case where \(\sigma = \ell\) is a single parameter controlling the intrinsic length scale, that is the distance at which the correlation between two points \(\ell\) units apart is approximately zero. The larger \(\ell\) is, the more correlated observations of the GP are and, hence, the less wiggly its realisation is. On the other hand, as \(\ell \rightarrow 0\), the observations GP often behaves like realisations from an iid Gaussian and the GP becomes14 wilder and wilder.

This suggests that a good base model for the length-scale parameter would be \(\ell_0 = \infty\). We note that if both the base model and the alternative have the same value of \(\sigma\), then it cancels out in the KL-divergence. Under this assumption, we get that \[ d(\ell \mid \sigma) = \text{``}\lim_{\ell_0\rightarrow \infty}\text{''} \sqrt{\operatorname{tr}\left(R(\ell_0)^{-1}R(\ell)\right) - \log \det R(\ell) + \log \det R(\ell_0) - n}, \] where I’m being a bit cheeky putting that limit in, as we might need to do some singular model jiggery-pokery of the same type we needed to do for the standard deviation. We will formalise this, I promise.

As the model gets more complex as the length scale decreases, we want our prior to control the smallest value \(\ell\) can take. This suggests we want to choose \(\lambda\) to ensure \[ \Pr(\ell < L) = \alpha. \] How do we choose the lower bound \(L\)? One idea is that our prior should have very little probability of the length scale being smaller than the length-scale of the data. So we can chose \(L\) to be the smallest distance between observations (if the data is regularly spaced) or as a low quantile of the distribution of distances between nearest neighbours.

All of this will specify a PC prior for a Gaussian process. So let’s now discuss why that prior is a bit shit.

What’s bad about this?

The prior on the standard deviation is fine.

The prior on the length scale is more of an issue. There are a couple of bad things about this prior. The first one might seem innocuous at first glance. We decided to treat the GP as a multivariate Gaussian with covariance matrix \(\sigma^2 R(\theta)\). This is not a neutral choice. In order to do it, we need to commit to a certain set of observation locations15. Why? The matrix \(R(\theta)\) depends entirely on the observation locations and if we use this matrix to define the prior we are tied to those locations.

This means that if we change the amount of data in the model we will need to change the prior. This is going to play havoc16 on any sort of cross-validation! It’s worth saying that the other two sources of information (the minimum length scale and the upper bound on \(\sigma\)) are not nearly as sensitive to small changes in the data. This information is, in some sense, fundamental to the problem at hand and, therefore, much more stable ground to build your prior upon.

There’s another problem, of course: this prior is expensive to compute. The KL divergence involves computing \(\operatorname{tr}(R(\ell_0)^{-1}R(\ell))\) which costs as much as another log-density evaluation for the Gaussian process (which is to say it’s very expensive).

So this prior is going to be deeply inconvenient if we have varying amounts of data (through cross-validation or sequential data gathering). It’s also going to be wildly more computationally expensive than you expect a one-dimensional prior to be.

All in all, it seems a bit shit.

The Matérn covariance function

It won’t be possible to derive a prior for a general Gaussian process, so we are going to need to make some simplifying assumptions. The assumption that we are going to make is that the covariance comes from the Whittle-Matérn17 18 class \[ c(s, s') = \sigma^2 \frac{2^{1-\nu}}{\Gamma(\nu)}\left(\sqrt{8\nu}\frac{\|s-s'\|}{\ell}\right)^\nu K_\nu\left(\sqrt{8\nu}\frac{\|s-s'\|}{\ell}\right), \] where \(\nu\) is the smoothness parameter, \(\ell\) is the length-scale parameter, \(\sigma\) is the marginal standard deviation, and \[ K_\nu(x) = \int_0^\infty e^{-x\cosh t}\cosh(\nu t)\,dt \] is the modified Bessel19 function of the second kind.

This class of covariance function is extremely important in practice. It interpolates between two of the most common covariance functions:

- when \(\nu = 1/2\), it corresponds to the exponential covariance function,

- when \(\nu = \infty\), it corresponds to the squared exponential covariance.

There are years of experience suggesting that Matérn covariance functions with finite \(\nu\) will often perform better than the squared exponential covariance.

Common practice is to fix20 the value of \(\nu\). There are a few reasons for this. One of the most compelling practical reasons is that we can’t easily evaluate its derivative, which rules out most modern optimisation and MCMC algorithms. It’s also very difficult to think about how you would set a prior on it. The techniques in this post will not help, and as far as I’ve ever been able to tell, nothing else will either. Finally, you could expect there to be horrible confounding between \(\nu\), \(\ell\), and \(\sigma\), which will make inference very hard (both numerically and morally).

It turns out that even with \(\nu\) fixed, we will run into a few problems. But to understand those, we are going to need to know a bit more about how inferring parameters in a Gaussian processes actually works.

Just for future warning, I will occasionally refer to a GP with a Matérn covariance function as a “Matérn field”21.

Asymptotic? I barely know her!

Let’s take a brief detour into classical inference for a moment and ask ourselves when can we recover the parameters of a Gaussian process? For most models we run into in statistics, the answer to that question is when we get enough data. But for Gaussian processes, the story is more complex.

First of all, there is the very real question of what we mean by getting more data. When our observations are iid, this so easy that when asked how she got more data, Kylie just said she “did it again”.

But this is more complex once data has dependence. For instance, in a multilevel model you could have the number of groups staying fixed while the number of observations in each group goes to infinity, you could have the number of observations in each group staying fixed while the number of groups go to infinity, or you could have both22 going to infinity.

For Gaussian processes it also gets quite complicated. Here is a non-exhaustive list of options:

- You observe the same realisation of the GP at an increasing number of points that eventually cover the whole of \(\mathbb{R}^d\) (this is called the increasing domain or outfill regime); or

- You observe the same realisation of the GP at an increasing number of points that stay within a fixed domain (this is called the fixed domain or infill regime); or

- You observe multiple realisations of the same GP at a finite number of points that stay in the same location (this does not have a name, in space-time it’s sometimes called monitoring data); or

- You observe multiple realisations of the same GP at a (possibly different) finite number of points that can be in different locations for different realisations; or

- You observe realisations of a process that evolves in space and time (not really a different regime so much as a different problem).

One of the truly unsettling things about Gaussian processes is that the ability to estimate the parameters depends on which of these regimes you choose!

Of course, we all know that asymptotic regimes are just polite fantasies that statisticians concoct in order to self-soothe. They are not reflections on reality. They serve approximately the same purpose23 as watching a chain of Law and Order episodes.

The point of thinking about what happens when we get more data is to use it as a loose approximation of what happens with the data you have. So the real question is which regime is the most realistic for my data?.

One way you can approach this question is to ask yourself what you would do if you had the budget to get more data. My work has mostly been in spatial statistics, in which case the answer is usually24 that you would sample more points in the same area. This suggests that fixed-domain asymptotics is a good fit for my needs. I’d expect that in most GP regression cases, we’re not expecting25 that further observations would be on new parts of the covariate space, which would suggest fixed-domain asymptotics are useful there too.

This, it turns out, is awkward.

When is a parameter not consistently estimatable: an aside that will almost immediately become relevant

The problem with a GP with the Matérn covariance function on a fixed domain is that it’s not possible26 to estimate all of its parameters at the same time. This isn’t the case for the other asymptotic regimes, but you’ve got to dance with who you came to the dance with.

To make this more concrete, we need to think about a Gaussian process as a realisation of a function rather than as a vector of observations. Why? Because under fixed-domain asymptotics we are seeing values of the function closer and closer together until we essentially see the entire function on that domain.

Of course, this is why I wrote a long and technical blog post on understanding Gaussian processes as random functions. But don’t worry. You don’t need to have read that part.

The key thing is that because a GP is a function, we need to think of it’s probability of being in a set \(A\) of functions. There will be a set of function \(\operatorname{supp}(u)\), which we call the support of \(u(\cdot)\), that is the smallest set such that \[ \Pr(u(\cdot) \in \operatorname{supp}(u)) = 1. \] Every GP has an associated support and, while you probably don’t think much about it, GPs are obsessed with their supports. They love them. They hug them. They share them with their friends. They keep them from their enemies. And they are one of the key things that we need to think about in order to understand why it’s hard to estimate parameters in a Matérn covariance function.

There is a key theorem that is unique27 to Gaussian processes. It’s usually phrased in terms of Gaussian measures, which are just the probability associated with a GP. For example, if \(u_1(\cdot)\) is a GP then \[ \mu_1(A) = \Pr(u_1(\cdot) \in A) \] is the corresponding Gaussian measure. We can express the support of \(u(\cdot)\) as the smallest set of functions such that \(\mu(A)=1\).

Theorem 1 (Feldman-Hájek theorem) Two Gaussian measures \(\mu_1\) and \(\mu_2\) with corresponding GPs \(u_1(\cdot)\) and \(u_2(\cdot)\) on a locally convex space28 either satisfy, for every29 set \(A\),

\[

\mu_2(A) > 0 \Rightarrow \mu_1(A) > 0 \text{ and } \mu_1(A) > 0 \Rightarrow \mu_2(A) > 0,

\] in which case we say that \(\mu_1\) and \(\mu_2\) are equivalent30 (confusingly31 written \(\mu_1 \equiv \mu_2\)) and \(\operatorname{supp}(u_1) = \operatorname{supp}(u_2)\), or \[

\mu_2(A) > 0 \Rightarrow \mu_1(A) = 0 \text{ and } \mu_1(A) > 0 \Rightarrow \mu_2(A) = 0,

\] in which case we say \(\mu_1\) and \(\mu_2\) are singular (written \(\mu_1 \perp \mu_2\)) and \(u_1(\cdot)\) and \(u_2(\cdot)\) have disjoint supports.

Later on in the post, we will see some precise conditions for when two Gaussian measures are equivalent, but for now it’s worth saying that it is a very delicate property. In fact, if \(u_2(\cdot) = \alpha u_1(\cdot)\) for any \(|\alpha|\neq 1\), then32 \(\mu_1 \perp \mu_2\)!

This seems like it will cause problems. And it can33. But it’s fabulous for inference.

To see this, we can use one of the implications of singularity: \(\mu_1 \perp \mu_2\) if and only if \[ \operatorname{KL}(u_1(\cdot) || u_2(\cdot)) = \infty, \] where the the Kullback-Leibler divergence can be interpreted as the expectation of the likelihood ratio of \(u_1\) vs \(u_2\) under \(u_1\). Hence, if \(u_1(\cdot)\) and \(u_2(\cdot)\) are singular, we can (on average) choose the correct one using a likelihood ratio test. This means that we will be able to correctly recover the true34 parameter.

It turns out the opposite is also true.

Theorem 2 If \(\mu_\theta\), \(\theta \in \Theta\) is a family of Gaussian measures corresponding to the GPs \(u_\theta(\cdot)\) and \(\mu_\theta \equiv \mu_{\theta'}\) for all values of \(\theta, \theta' \in \Theta\), then there is no sequence of estimators \(\hat \theta_n\) such that, for all \(\theta_0 \in \Theta\) \[ {\Pr}_{\theta_0}(\hat \theta_n \rightarrow \theta_0) = 1, \] where \({\Pr}_{\theta_0}(\cdot)\) is the probability under data drawn with true parameter \(\theta_0\). That is, there is no estimator \(\hat \theta_n\) that is (strongly) consistent for all \(\theta \in \Theta\).

Click for a surprise (the proof. shit i spoiled the surprise)

Proof. We are going to do this by contradiction. So assume that there is a sequence such that \[ \Pr{_{\theta_0}}(\hat \theta_n \rightarrow \theta_0) = 1. \] For some \(\epsilon >0\), let \(A_n = \{\|\hat\theta_n - \theta_0\|>\epsilon\}\). Then we can re-state our almost sure convergence as \[ \Pr{_{\theta_0}}\left(\limsup_{n\rightarrow \infty}A_n\right) = 0, \] where the limit superior is defined35 as \[ \limsup_{n\rightarrow \infty}A_n = \bigcap_{n=1}^\infty \left(\bigcup_{m=n}^\infty A_n\right). \]

For any \(\theta' \neq \theta_0\) with \(\mu_{\theta'} \equiv \mu_{\theta_0}\), the definition of equivalent measures tells us that \[ \Pr{_{\theta'}}\left(\limsup_{n\rightarrow \infty}A_n\right) = 0 \] and therefore \[ \Pr{_{\theta'}}\left(\hat \theta_n \rightarrow \theta_0\right) = 1. \] The problem with this is that is that this data is generated using \(u_{\theta'}\), but the estimator converges to \(\theta_0\) instead of \(\theta'\). Hence, the estimator isn’t uniformly (strongly) consistent.

This seems bad but, you know, it’s a pretty strong version of convergence. And sometimes our brothers and sisters in Christ who are more theoretically minded like to give themselves a treat and consider weaker forms of convergence. It turns out that that’s a disaster too.

Theorem 3 If \(\mu_\theta\), \(\theta \in \Theta\) is a family of Gaussian measures corresponding to the GPs \(u_\theta(\cdot)\) and \(\mu_\theta \equiv \mu_{\theta'}\) for all values of \(\theta, \theta' \in \Theta\), then there is no sequence of estimators \(\hat \theta_n\) such that, for all \(\theta_0 \in \Theta\) and all \(\epsilon > 0\) \[ \lim_{n\rightarrow \infty}{\Pr}_{\theta_0}(\|\hat \theta_n - \theta_0\| > \epsilon) = 0. \] That is there is no estimator \(\hat \theta_n\) that is (weakly) consistent for all \(\theta \in \Theta\).

If you can’t tell the difference between these two theorems that’s ok. You probably weren’t trying to sublimate some childhood trauma and all of your sexual energy into maths just so you didn’t have to deal with the fact that you might be gay and you were pretty sure that wasn’t an option and anyway it’s not like it’s that important. Like whatever, you don’t need physical or emotional intimacy. You’ve got a pile of books on measure theory next to your bed. You are living your best life. Anyway. It makes almost no practical difference. BUT I WILL PROVE IT ANYWAY.

Once more, into the proof.

Proof. This proof is based on a kinda advanced fact, which involves every mathematician’s favourite question: what happens along a sub-sequence?

This basically says that the two modes of convergence are quite similar except convergence in probability is relaxed enough to have some36 values that aren’t doing so good at the whole converging thing.

With this in hand, let us build a contradiction. Assume that \(\hat \theta_n\) is weakly consistent for all \(\theta \in \Theta\). Then, if we generate data under \(\mu_{\theta_0}\), then we get that, along a sub-sequence \(n_k\) \[ \Pr{_{\theta_0}}(\hat \theta_{n_k} \rightarrow \theta_0) =1. \]

Now, if \(\hat \theta_n\) is weakly consistent for all \(\theta\), then so is \(\hat \theta_{n_k}\). Then, by our assumption, for every \(\theta' \in \Theta\) and every \(\epsilon>0\) \[ \lim_{k \rightarrow \infty} \Pr{_{\theta'}}\left(\|\hat \theta_{n_k} - \theta'\| > \epsilon\right) = 0. \]

Our probability fact tells us that there is a further infinite sub-sub-sequence \(n_{k_\ell}\) such that \[ \Pr{_{\theta'}}\left(\hat \theta_{n_{k_\ell}} \rightarrow \theta'\right) = 1. \] But Theorem 2 tells us that \(\hat \theta_{n_k}\) (and hence \(\theta_{n_{k_l}}\)) satisfies \[ \Pr{_{\theta'}}\left(\hat \theta_{n_{k_\ell}} \rightarrow \theta_0\right) = 1. \] This is a contradiction unless \(\theta'= \theta_0\), which proves the assertion.

Matérn fields under fixed domain asymptotics: the love that dares not speak its name

All of that lead up immediately becomes extremely relevant once we learn one thing about Gaussian processes with Matérn covariance functions.

Theorem 4 Let \(\mu_{\nu, \sigma, \ell}\) be the Gaussian measure corresponding to the GP with Matérn covariance function with parameters \((\nu, \sigma, \ell)\), let \(D\) be any finite domain in \(\mathbb{R}^d\), and let \(d \leq 3\). Then, restricted to \(D\), \[ \mu_{\nu,\sigma_1, \ell_1} \equiv \mu_{\nu, \sigma_2, \ell_2} \] if and only if \[ \frac{\sigma_1^2}{\ell_1^{2\nu}} = \frac{\sigma_2^2}{\ell_2^{2\nu}}. \]

I’ll go through the proof of this later, but the techniques require a lot of warm up, so let’s just deal with the consequences for now.

Basically, Theorem 4 says that we can’t consistently estimate the range and the marginal standard deviation for a one, two, or three dimensional Gaussian process. Hao Zhang noted this and that it remains true37 when dealing with non-Gaussian data.

The good news, I guess, is that in more than four38 dimensions the measures are always singular.

Now, I don’t give one single solitary shit about the existence of consistent estimators. I am doing Bayesian things and this post is supposed to be about setting prior distributions. But it is important. Let’s take a look at some simulations.

First up, let’s look at what happens in 2D when we directly (ie with no noise) observe a zero-mean GP with exponential covariance function (\(\nu = 1/2\)) at points in the unit square. In this case, the log-likelihood is, up to an additive constant, \[ \log p(y \mid \theta) = -\frac{1}{2}\log |\Sigma(\theta)| - \frac{1}{2}y^T\Sigma(\theta)^{-1}y. \]

The R code is not pretty but I’m trying to be relatively efficient with my Cholesky factors.

We can now simulate 500 data points on the unit square, compute their distances, and simulate from the GP.

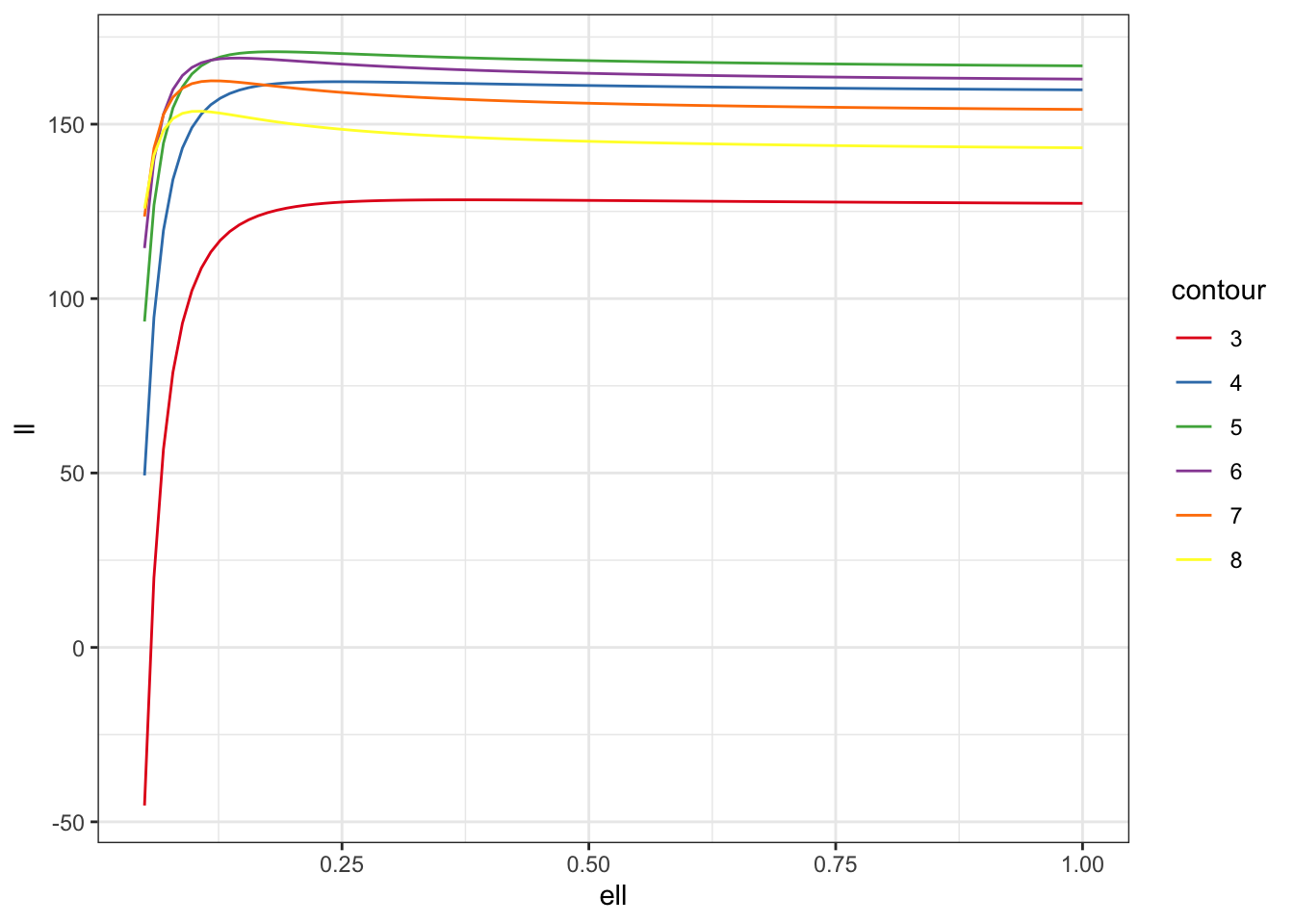

With all of this in hand, let’s look at the likelihood surface along39 the line \[

\frac{\sigma^2}{\ell} = c

\] for various values of \(c\). I’m using some purrr trickery40 here to deal with the fact that sometimes the Cholesky factorisation will throw an error.

m <- 100

f_direct <- partial(log_lik, y = dat$y, h = dat$dist_mat)

pars <- \(c) tibble(ell = seq(0.05,1, length.out = m),

sigma = sqrt(c * ell), c = rep(c, m))

ll <- map_df(3:8,pars) |>

mutate(contour = factor(c),

ll = map2_dbl(sigma, ell,

possibly(f_direct,

otherwise = NA_real_)))

ll |> ggplot(aes(ell, ll, colour = contour)) +

geom_line() +

scale_color_brewer(palette = "Set1") +

theme_bw()

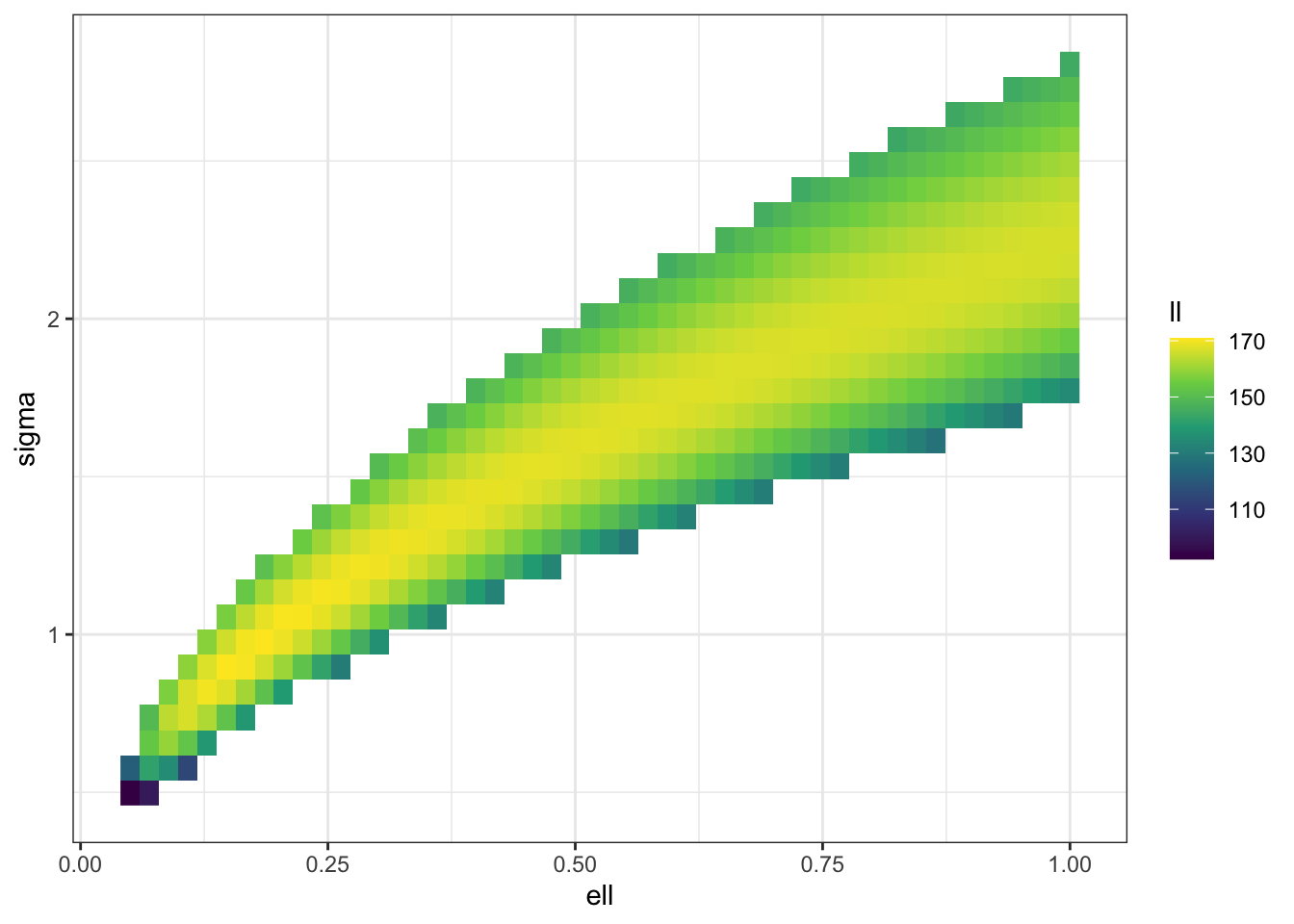

We can see the same thing in 2D (albeit at a lower resolution for computational reasons). I’m also not computing a bunch of values that I know will just be massively negative.

f_trim <- \(sigma, ell) ifelse(sigma^2 < 3*ell | sigma^2 > 8*ell,

NA_real_, f_direct(sigma, ell))

m <- 50

surf <- expand_grid(ell = seq(0.05,1,length.out = m),

sigma = seq(0.1, 4, length.out = m)) |>

mutate(ll = map2_dbl(sigma, ell,

possibly(f_trim, otherwise = NA_real_)))

surf |> filter(ll > 50) |>

ggplot(aes(ell, sigma, fill = ll)) +

geom_raster() +

scale_fill_viridis_c() +

theme_bw()

Clearly there is a ridge in the likelihood surface, which suggests that our posterior is going to be driven by the prior along that ridge.

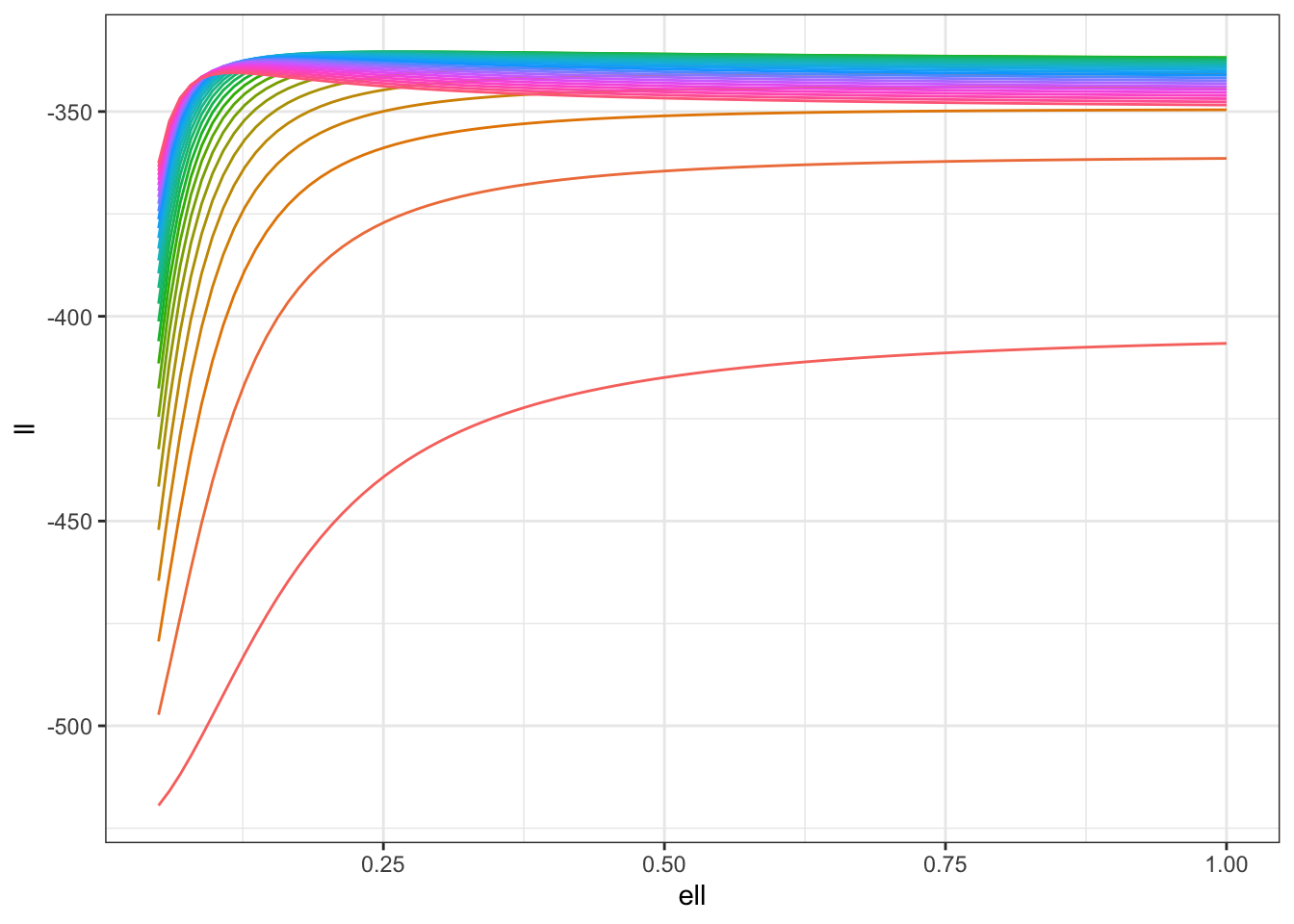

For completeness, let’s run the same experiment again when we have some known observation noise, that is \(y_i \sim N(u(s_i), 1)\). In this case, the log-likelihood is \[ \log p(y\mid \sigma, \ell) = -\frac{1}{2} \log \det(\Sigma(\theta) + I) - \frac{1}{2}y^{T}(\Sigma(\theta) + I)^{-1}y. \]

Let us do the exact same thing again!

n <- 500

dat <- tibble(s1 = runif(n), s2 = runif(n),

dist_mat = as.matrix(dist(cbind(s1,s2))),

mu = MASS::mvrnorm(mu=rep(0,n),

Sigma = cov_fun(dist_mat, 1.0, 0.2)),

y = rnorm(n, mu, 1))

log_lik <- function(sigma, ell, y, h) {

V <- cov_fun(h, sigma, ell)

R <- chol(V + diag(dim(V)[1]))

-sum(log(diag(R))) - 0.5*sum(y * backsolve(R, backsolve(R, y, transpose = TRUE)))

}

m <- 100

f <- partial(log_lik, y = dat$y, h = dat$dist_mat)

pars <- \(c) tibble(ell = seq(0.05,1, length.out = m),

sigma = sqrt(c * ell), c = rep(c, m))

ll <- map_df(seq(0.1, 10, length.out = 30),pars) |>

mutate(contour = factor(c),

ll = map2_dbl(sigma, ell,

possibly(f, otherwise = NA_real_)))

ll |> ggplot(aes(ell, ll, colour = contour)) +

geom_line(show.legend = FALSE) +

#scale_color_brewer(palette = "Set1") +

theme_bw()

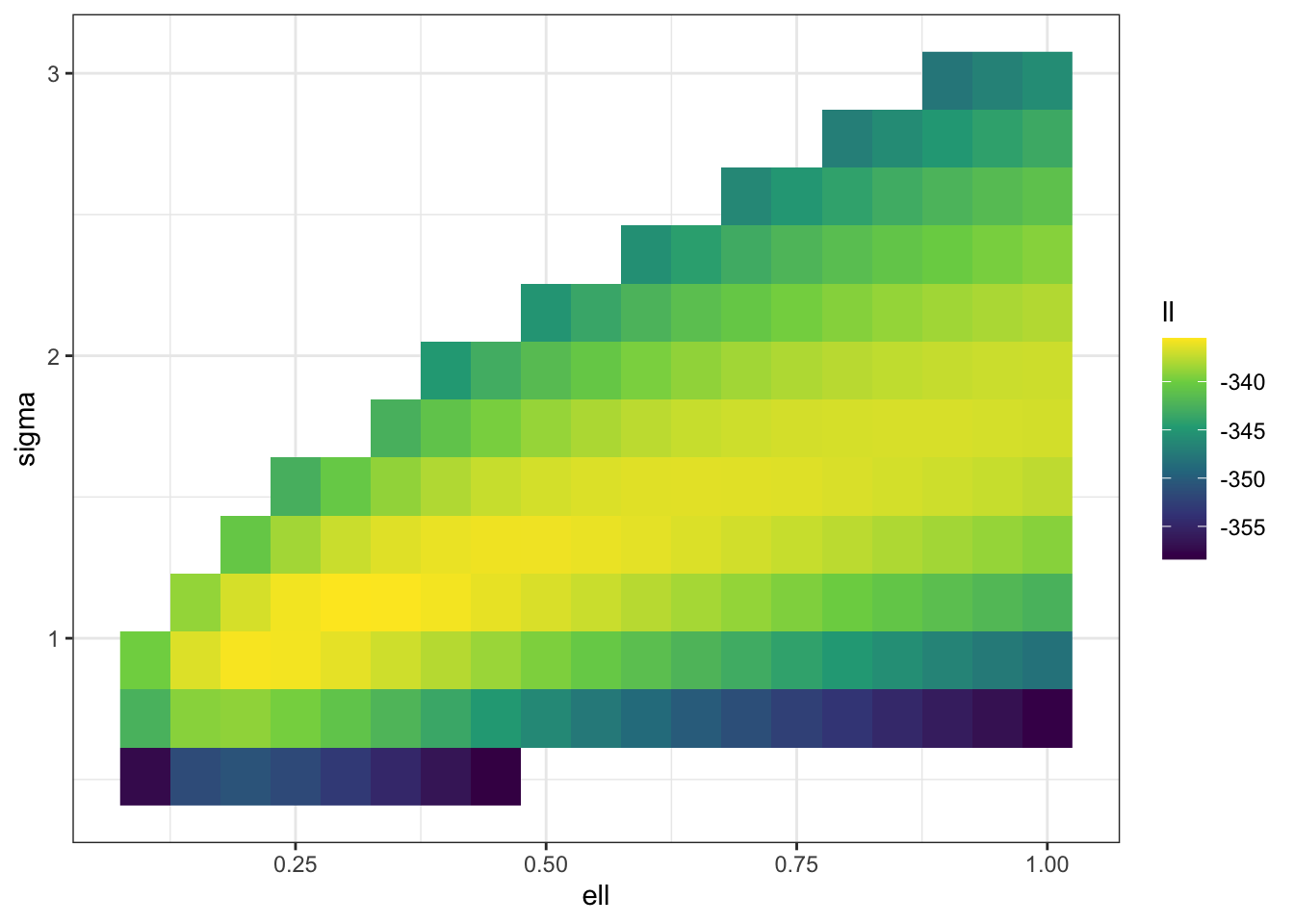

f_trim <- \(sigma, ell) ifelse(sigma^2 < 0.1*ell | sigma^2 > 10*ell,

NA_real_, f(sigma, ell))

m <- 20

surf <- expand_grid(ell = seq(0.05,1,length.out = m),

sigma = seq(0.1, 4, length.out = m)) |>

mutate(ll = map2_dbl(sigma, ell,

possibly(f_trim, otherwise = NA_real_)))

surf |> filter(ll > -360) |>

ggplot(aes(ell, sigma, fill = ll)) +

geom_raster() +

scale_fill_viridis_c() +

theme_bw()

Once again, we can see that there is going to be a ridge in the likelihood surface! It’s a bit less disastrous this time, but it’s not excellent even with 500 observations (which is a decent number on a unit square). The weird structure of the likelihood is still going to lead to a long, non-elliptical shape in your posterior that your computational engine (and your person interpreting the results) are going to have to come to terms with. In particular, if you only look at the posterior marginal distributions for \(\sigma\) and \(\ell\) you may miss the fact that \(\sigma \ell^{\nu}\) is quite well estimated by the data even though the marginals for both \(\sigma\) and \(\ell\) are very wide.

This ridge in the likelihood is going to translate somewhat into a ridge in the prior. We will see below that how much of that ridge we see is going to be very dependent on how we specify the prior. The entire purpose of the PC prior is to meaningfully resolve this ridge using sensible prior information.

But before we get to the (improved) PC prior, it’s worthwhile to survey some other priors that have been proposed in the literature.

So the prior is important then! What do other people do?

That ridge in the likelihood surface does not go away in low dimensions, which essentially means that our inference along that ridge is going to be driven by the prior.

Possibly the worst choice you could make in this situation is trying to make a minimally informative prior. Of course, that’s what somebody did when they made a reference prior for the problem. In fact it was the first paper41 that looks rigorously at prior distributions on the parameters of GPs. It’s just unfortunate that it’s quite shit. It has still been cited quite a lot. And there are some technical advances to the theory of reference priors, but if you use it you just find yourself mapping out that damn ridge.

On top of being, structurally, a bad choice, the reference prior has a few other downsides:

- It is very computationally intensive and quite complex. Not unlike the bad version of the PC prior!

- It requires strong assumptions about the likelihood. The first version assumed that there was no observation noise. Later papers allowed there to be observation noise. But only if it’s Gaussian.

- It is derived under the asymptotic regime where an infinite sequence of different independent realisations of the GP are observed at the same finite set of points. This is not the most useful regime for GPs.

All in all, it’s a bit of a casserole.

From the other end, there’s a very interesting contribution from Aad van der Vaart and Harry van Zanten wrote a very lovely theoretical paper that looked at which priors on \(\ell\) could result in theoretically optimal contraction rates for the posterior of \(u(\cdot)\). They argued that \(\ell^{-d}\) should have a Gamma distribution. Within the Matérn class, their results are only valid for the squared exponential contrivance function.

One of the stranger things that I have never fully understood is that the argument I’m going to make below ends up with a gamma distribution on \(\ell^{-d/2}\), which is somewhat different to van der Vaart and van Zanten. If I was to being forced to bullshit some justification I’d probably say something about the Matérn process depending only on the distance between observations makes the \(d\)-sphere the natural geometry (the volume of which scales like \(\ell^{-d/2}\)) rather than the \(d\)-cube (the volume of which scales lie \(\ell^{-d}\)). But that would be total bullshit. I simply have no idea. They’re proposal comes via the time-honoured tradition of “constant chasing” in some fairly tricky proofs, so I have absolutely no intuition for it.

We also found in other contexts that use the KL divergence rather than its square root tended to perform worse. So I’m kinda happy with our scaling and, really, their paper doesn’t cover the covariance functions I’m considering in this post.

Neither42 of these papers consider that ridge in the likelihood surface.

This lack of consideration—as well as their success in everything else we tried them on—was a big part of our push to make a useful version of a PC prior for Gaussian processes.

Rescuing the PC prior on \(\ell\); or What I recommend you do

It has been a long journey, but we are finally where I wanted us to be. So let’s talk about how to fix the PC prior. In particular, I’m going to go through how to derive a prior on the length scale \(\ell\) that has a simple form.

In order to solve this problem, we are going to do three things in the rest of this post:

- Restrict our attention to the stationary43 GPs

- Restrict our attention to the Matérn class of covariance functions.

- Greatly increase our mathematical44 sophistication.

But before we do that, I’m going to walk you through the punchline.

This work was originally done with the magnificent Geir-Arne Fuglstad, the glorious Finn Lindren, and the resplendent Håvard Rue. If you want to read the original paper, the preprint is here45.

The PC prior is derived using the base model \(\ell = \infty\), which might seem like a slightly weird choice. The intuition behind it is that if there is strong dependence between far away points, the realisations of \(u(\cdot)\) cannot be too wiggly. In some context people talk about \(\ell\) as a “smoothness”46 parameter because realisations with large \(\ell\) “look”47 smoother than realisations with small \(\ell\).

Another way to see the same thing is to note that a Matérn field approaches a48 smoothing spline prior, in which case \(\sigma^{-2}\) plays the role of the “smoothing parameter” of the spline. In that case, the natural base model of \(\sigma=0\) interacts with the base model of \(\ell = \infty\) to shrink towards an increasingly flat surface centred on zero.

We still need to choose a quantity of interest in order to encode some explicit information in the prior. In this case, I’m going to use the idea that for any data set, we only have information up to a certain spatial resolution. In that case, we don’t want to put prior mass on the length scale being less than that resolution. Why? Well any inference about \(\ell\) at a smaller scale than the data resolution is going to be driven entirely by unverifiable model assumptions. And that feels a bit awkward. This suggests that we chose a minimum49 length scale \(L\) and choose the scaling parameter in the PC prior so that \[ \Pr(\ell < L) < \alpha_\ell. \]

Under these assumptions, the PC prior for the length scale in a \(d\)-dimensional space is50 a Fréchet distribution51 with shape parameter \(d/2\) and scale parameter \(\lambda_\ell^{2/d}\). That is, \[ p(\ell) = \frac{d\lambda_\ell}{2} \ell^{-(d/2+1)}e^{-\lambda_{\ell}\ell^{-d/2}}, \] where we choose \(\lambda_\ell = -\log(\alpha_\ell)L^{d/2}\) to ensure that \[ \Pr(\ell < L) = e^{-\lambda L^{-d/2}} < \alpha_\ell. \]

In two dimensions, this is an inverse gamma prior, which gives rigorous justification to a commonly used prior in spatial statistics.

Comparing it with the reference prior

Ok, so let’s actually see how much of a difference using a weakly informative prior makes relative to using the reference prior.

In the interest of computational speed, I’m going to use the simplest possible model setup, \[ y \mid \sigma,\ell \sim N(0, \sigma^2 R(\ell)), \] and I’m only going to use 25 observations.

In this case52 is \[ p(\ell, \sigma) = \sigma^{-1}\left(\operatorname{tr}\left[\left(\frac{\partial R}{\partial \ell}R^{-1}\right)^2\right] - \frac{1}{n}\operatorname{tr}\left(\frac{\partial R}{\partial \ell}R^{-1}\right)^2\right)^{1/2}. \]

Even with this limited setup, it took a lot of work to make Stan sample this posterior. You’ll notice that I did a ridge-aware reparameterisation. I also had to run twice as much warm up as I ordinarily would.

The Stan code is under the fold.

Show the Stan code!

functions {

matrix cov(int N, matrix s, real ell) {

matrix[N,N] R;

row_vector[2] s1, s2;

for (i in 1:N) {

for (j in 1:N){

s1 = s[i, 1:2];

s2 = s[j, 1:2];

R[i,j] = exp(-sqrt(dot_self(s1-s2))/ell);

}

}

return 0.5 * (R + R');

}

matrix cov_diff(int N, matrix s, real ell) {

// dR /d ell = cov(N, p ,s, sigma2*|x-y|/ell^2, ell)

matrix[N,N] R;

row_vector[2] s1, s2;

for (i in 1:N) {

for (j in 1:N){

s1 = s[i, 1:2];

s2 = s[j, 1:2];

R[i,j] = sqrt(dot_self(s1-s2)) * exp(-sqrt(dot_self(s1-s2))/ell) / ell^2 ;

}

}

return 0.5 * (R + R');

}

real log_prior(int N, matrix s, real sigma2, real ell) {

matrix[N,N] R = cov(N, s, ell);

matrix[N,N] W = (cov_diff(N, s, ell)) / R;

return 0.5 * log(trace(W * W) - (1.0 / (N)) * (trace(W))^2) - log(sigma2);

}

}

data {

int<lower=0> N;

vector[N] y;

matrix[N,2] s;

}

parameters {

real<lower=0> sigma2;

real<lower=0> ell;

}

model {

{

matrix[N,N] R = cov(N, s, ell);

target += multi_normal_lpdf(y | rep_vector(0.0, N), sigma2 * R);

}

target += log_prior(N, s, sigma2, ell);

}

generated quantities {

real sigma = sqrt(sigma2);

}By comparison, the code for the PC prior is fairly simple.

functions {

matrix cov(int N, matrix s, real sigma, real ell) {

matrix[N,N] R;

row_vector[2] s1, s2;

real sigma2 = sigma * sigma;

for (i in 1:N) {

for (j in 1:N){

s1 = s[i, 1:2];

s2 = s[j, 1:2];

R[i,j] = sigma2 * exp(-sqrt(dot_self(s1-s2))/ell);

}

}

return 0.5 * (R + R');

}

}

data {

int<lower=0> N;

vector[N] y;

matrix[N,2] s;

real<lower = 0> lambda_ell;

real<lower = 0> lambda_sigma;

}

parameters {

real<lower=0> sigma;

real<lower=0> ell;

}

model {

matrix[N,N] R = cov(N, s, sigma, ell);

y ~ multi_normal(rep_vector(0.0, N), R);

sigma ~ exponential(lambda_sigma);

ell ~ frechet(1, lambda_ell); // Only in 2D

}

// generated quantities {

// real check = 0.0; // should be the same as lp__

// { // I don't want to print R!

// matrix[N,N] R = cov(N, s, sigma, ell);

// check -= 0.5* dot_product(y,(R\ y)) + 0.5 * log_determinant(R);

// check += log(sigma) - lambda_sigma * sigma;

// check += log(ell) - 2.0 * log(ell) - lambda_ell / ell;

// }

// }This is a lot easier than the code for the reference prior.

Let’s compare the results on some simulated data. Here I’m choosing \(\alpha_\ell = \alpha_\sigma = 0.05\), \(L_\ell = 0.05\), and \(U_\sigma = 5\).

library(cmdstanr)

library(posterior)

n <- 25

dat <- tibble(s1 = runif(n), s2 = runif(n),

dist_mat = as.matrix(dist(cbind(s1,s2))),

y = MASS::mvrnorm(mu=rep(0,n),

Sigma = cov_fun(dist_mat, 1.0, 0.2)))

stan_dat <- list(y = dat$y,

s = cbind(dat$s1,dat$s2),

N = n,

lambda_ell = -log(0.05)*sqrt(0.05),

lambda_sigma = -log(0.05)/5)

mod_ref <- cmdstan_model("gp_ref_no_mean.stan")

mod_pc <- cmdstan_model("gp_pc_no_mean.stan")First off, let’s look at the parameter estimates from the reference prior

fit_ref <- mod_ref$sample(data = stan_dat,

seed = 30127,

parallel_chains = 4,

iter_warmup = 2000,

iter_sampling = 2000,

refresh = 0) Running MCMC with 4 parallel chains...

Chain 1 finished in 41.6 seconds.

Chain 2 finished in 43.4 seconds.

Chain 4 finished in 44.8 seconds.

Chain 3 finished in 47.0 seconds.

All 4 chains finished successfully.

Mean chain execution time: 44.2 seconds.

Total execution time: 47.2 seconds. variable mean median sd mad q5 q95 rhat ess_bulk ess_tail

lp__ -30.95 -30.57 1.24 0.89 -33.46 -29.79 1.00 1397 896

sigma2 32.56 1.28 823.19 0.58 0.69 7.19 1.00 979 562

ell 9.04 0.26 240.39 0.16 0.11 1.88 1.00 927 542

sigma 1.67 1.13 5.46 0.27 0.83 2.68 1.00 979 562It also took a bloody long time.

Now let’s check in with the PC prior.

fit_pc <- mod_pc$sample(data = stan_dat,

seed = 30127,

parallel_chains = 4,

iter_sampling = 2000,

refresh = 0) Running MCMC with 4 parallel chains...

Chain 1 finished in 4.9 seconds.

Chain 4 finished in 5.1 seconds.

Chain 3 finished in 5.4 seconds.

Chain 2 finished in 5.5 seconds.

All 4 chains finished successfully.

Mean chain execution time: 5.2 seconds.

Total execution time: 5.6 seconds. variable mean median sd mad q5 q95 rhat ess_bulk ess_tail

lp__ -10.36 -10.05 1.02 0.76 -12.42 -9.36 1.00 2160 3228

sigma 1.52 1.36 0.60 0.41 0.92 2.72 1.00 1424 1853

ell 0.67 0.45 0.72 0.27 0.19 1.89 1.00 1338 1694You’ll notice two things there: it did a much better job at sampling and it was much faster.

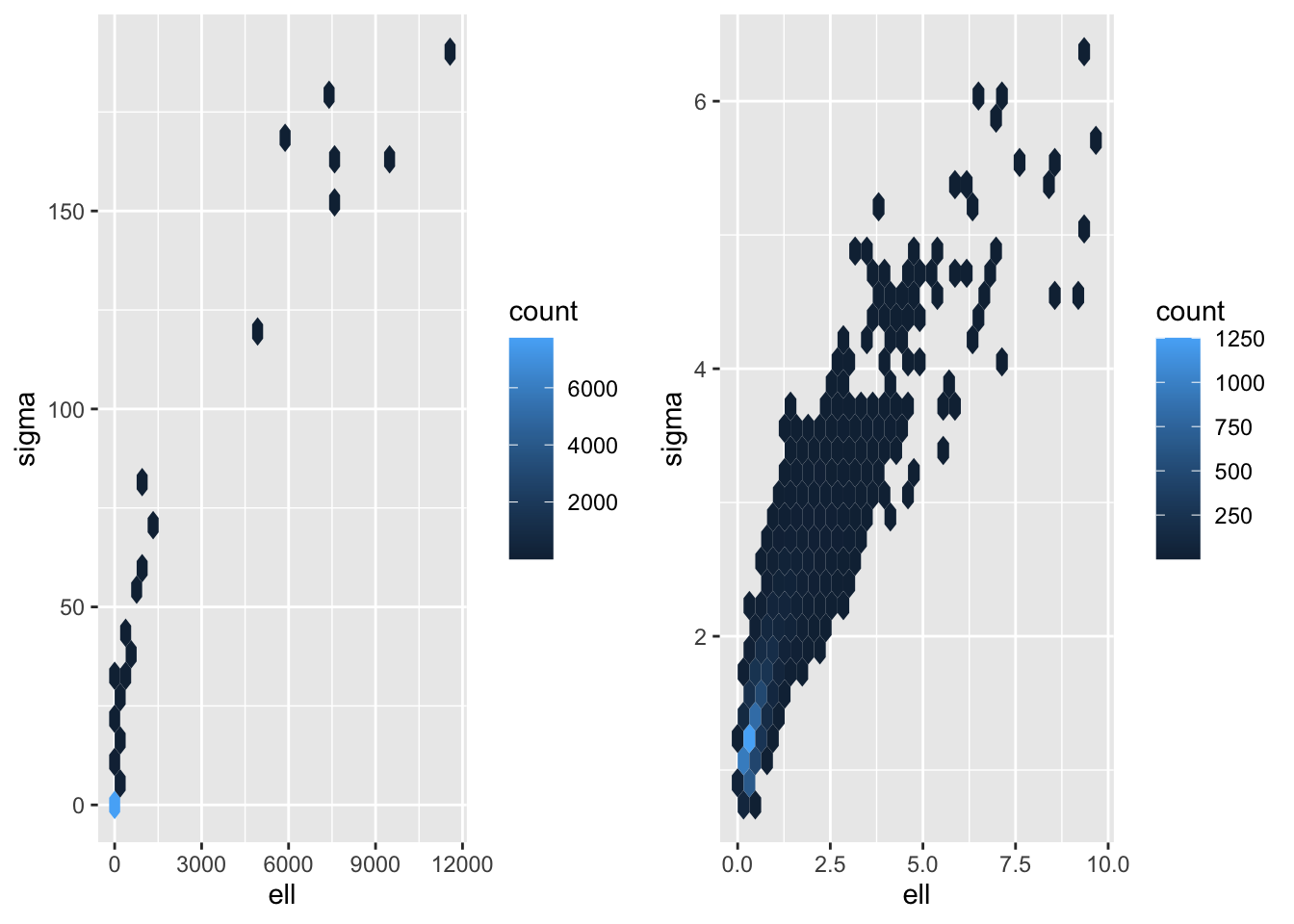

Finally, let’s look at some plots. First off, let’s look at some 2D density plots.

library(cowplot)

samps_ref <- fit_ref$draws(format = "draws_df")

samps_pc <- fit_pc$draws(format = "draws_df")

p1 <- samps_ref |> ggplot(aes(ell, sigma)) +

geom_hex() +

scale_color_viridis_c()

p2 <- samps_pc |> ggplot(aes(ell, sigma)) +

geom_hex() +

scale_color_viridis_c()

plot_grid(p1,p2)

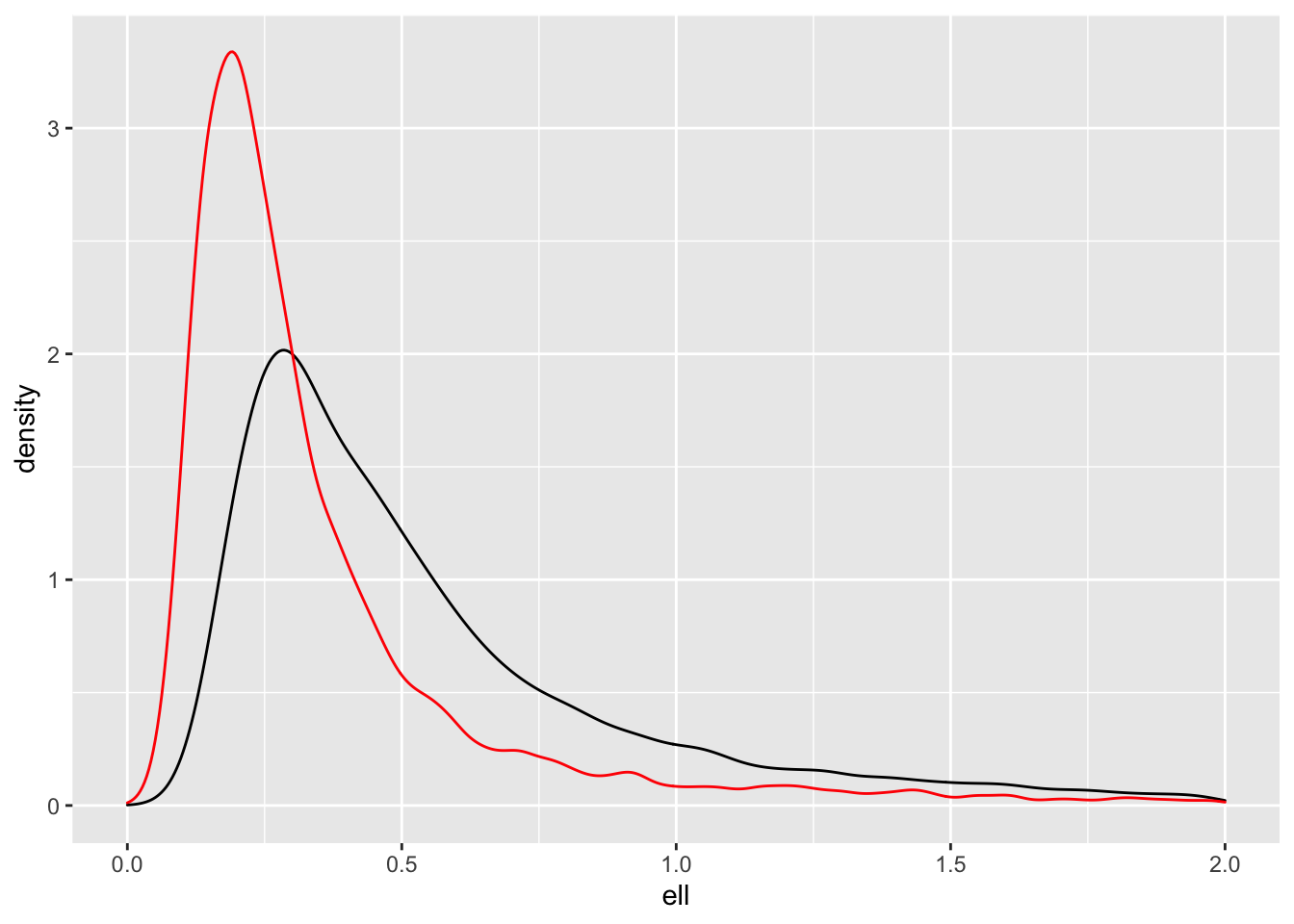

It would be interesting to look at how different the densities for \(\ell\) are.

samps_pc |> ggplot(aes(ell)) +

geom_density() +

geom_density(aes(samps_ref$ell), colour = "red") +

xlim(0,2)

As expected, the PC prior (black) pulls the posterior towards the base model (\(\ell = \infty\)), but what is interesting to me is that the posterior for the reference prior (red) has so much mass near zero. That’s the one thing we didn’t want to happen.

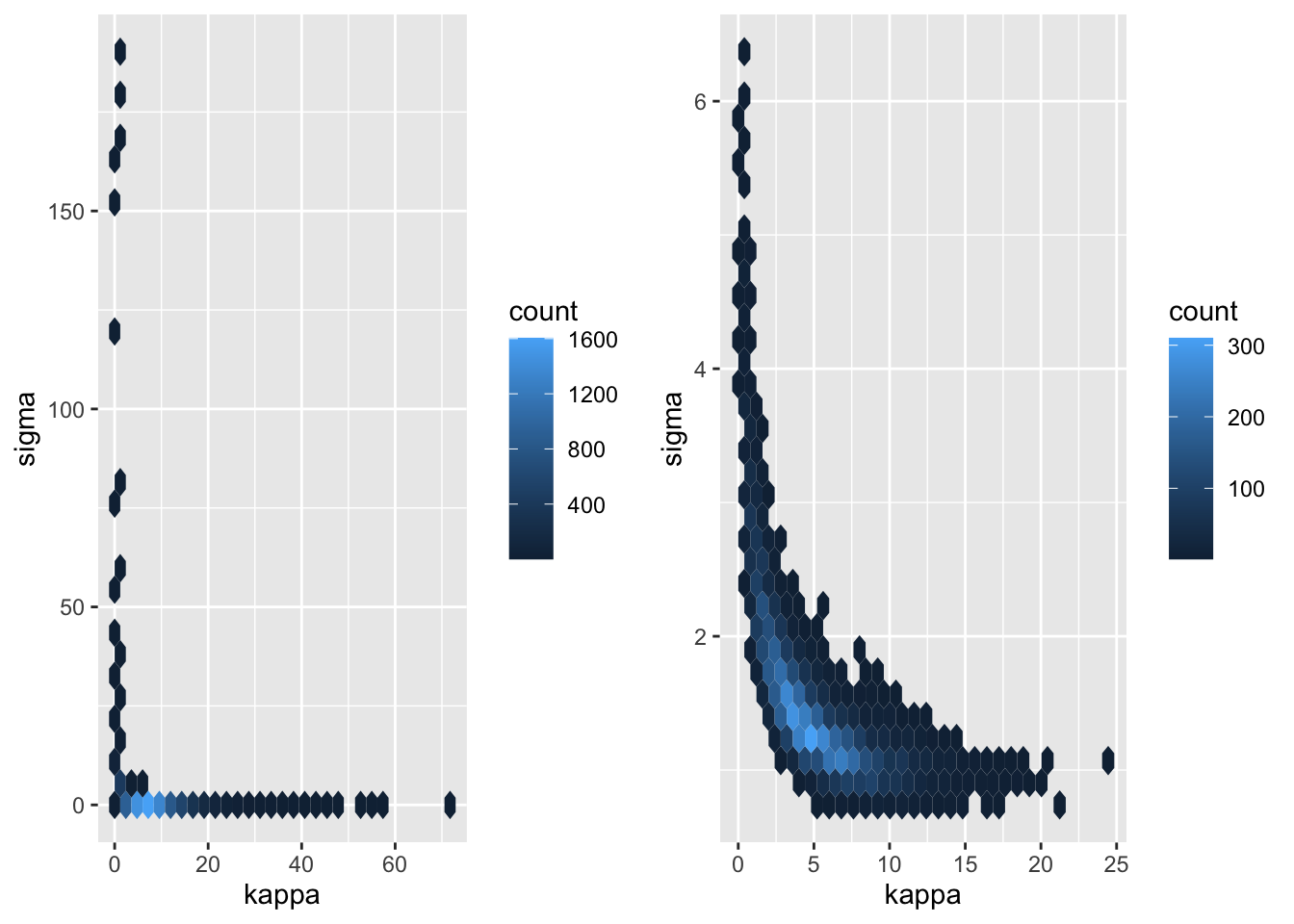

We can look closer at this by looking at the posterior for \(\kappa = 2\ell^{-1}\).

p3 <- samps_ref |>

mutate(kappa = 2/ell) |>

ggplot(aes(kappa, sigma)) +

geom_hex() +

scale_color_viridis_c()

p4 <- samps_pc |>

mutate(kappa = 2/ell) |>

ggplot(aes(kappa, sigma)) +

geom_hex() +

scale_color_viridis_c()

plot_grid(p3, p4)

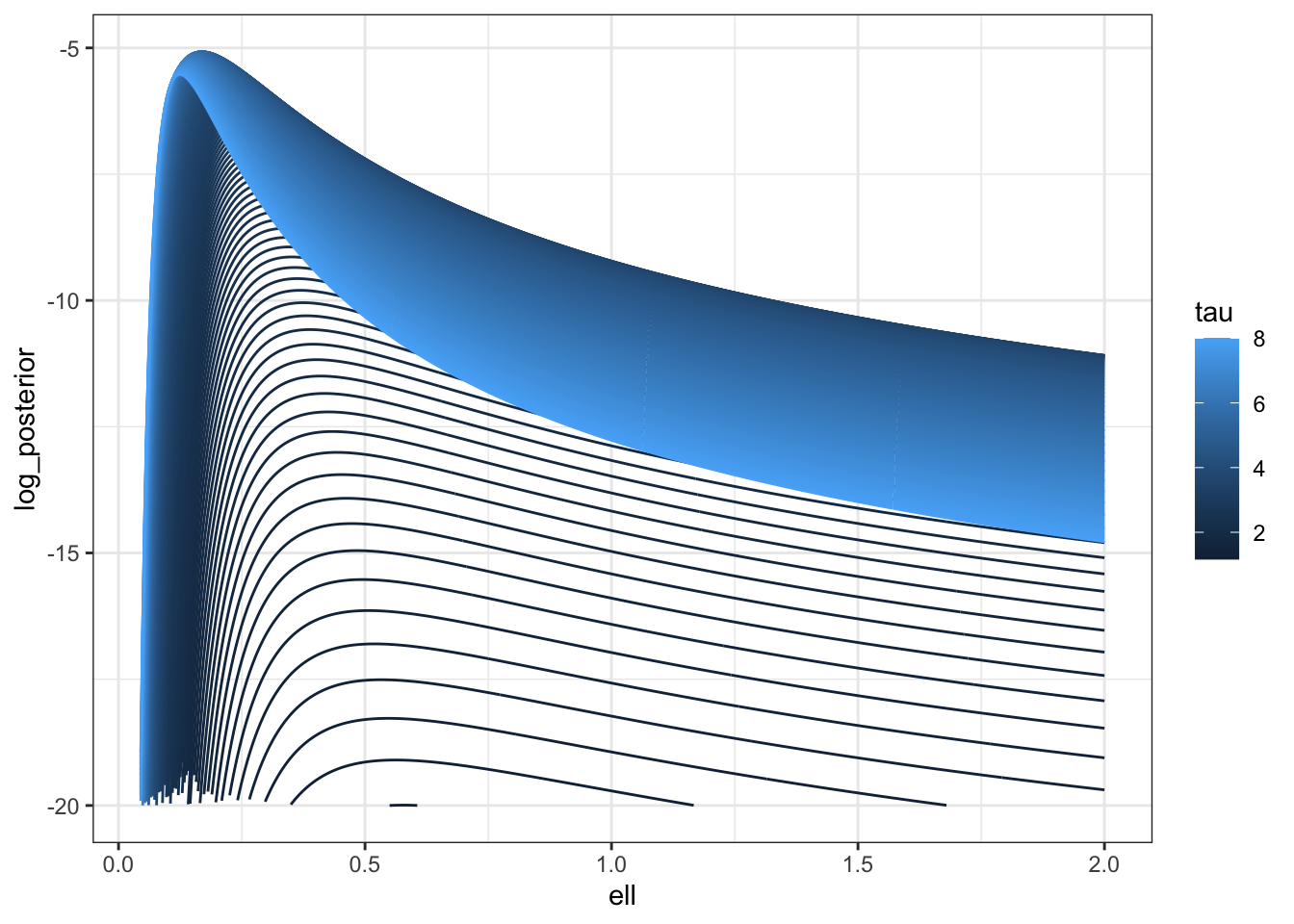

To be brutally francis with you all, I’m not sure how much I trust that Stan posterior, so I’m going to look at the posterior along the ridge.

log_prior <- function(sigma, ell) {

V <- cov_fun(dat$dist_mat, sigma, ell)

dV <- (V * dat$dist_mat)/ell^2

U <- t(solve(V, dV))

lprior <- 0.5 * log(sum(diag(U %*% U)) - sum(diag(U))^2/n) - log(sigma)

}

log_posterior <- \(sigma, ell) log_prior(sigma, ell) + f_direct(sigma, ell)

m <- 500

pars <- \(c) tibble(ell = seq(0.001,2, length.out = m),

sigma = sqrt(c * ell), c = rep(c, m))

lpost <- map_df(seq(0.001, 8, length.out = 200),pars) |>

mutate(tau = c,

log_posterior = map2_dbl(sigma, ell,

possibly(log_posterior, otherwise = NA_real_)))

lpost |>

filter(log_posterior > -20) |>

ggplot(aes(ell, log_posterior, colour = tau, group = tau)) +

geom_line() +

#scale_color_brewer(palette = "Set1") +

theme_bw()

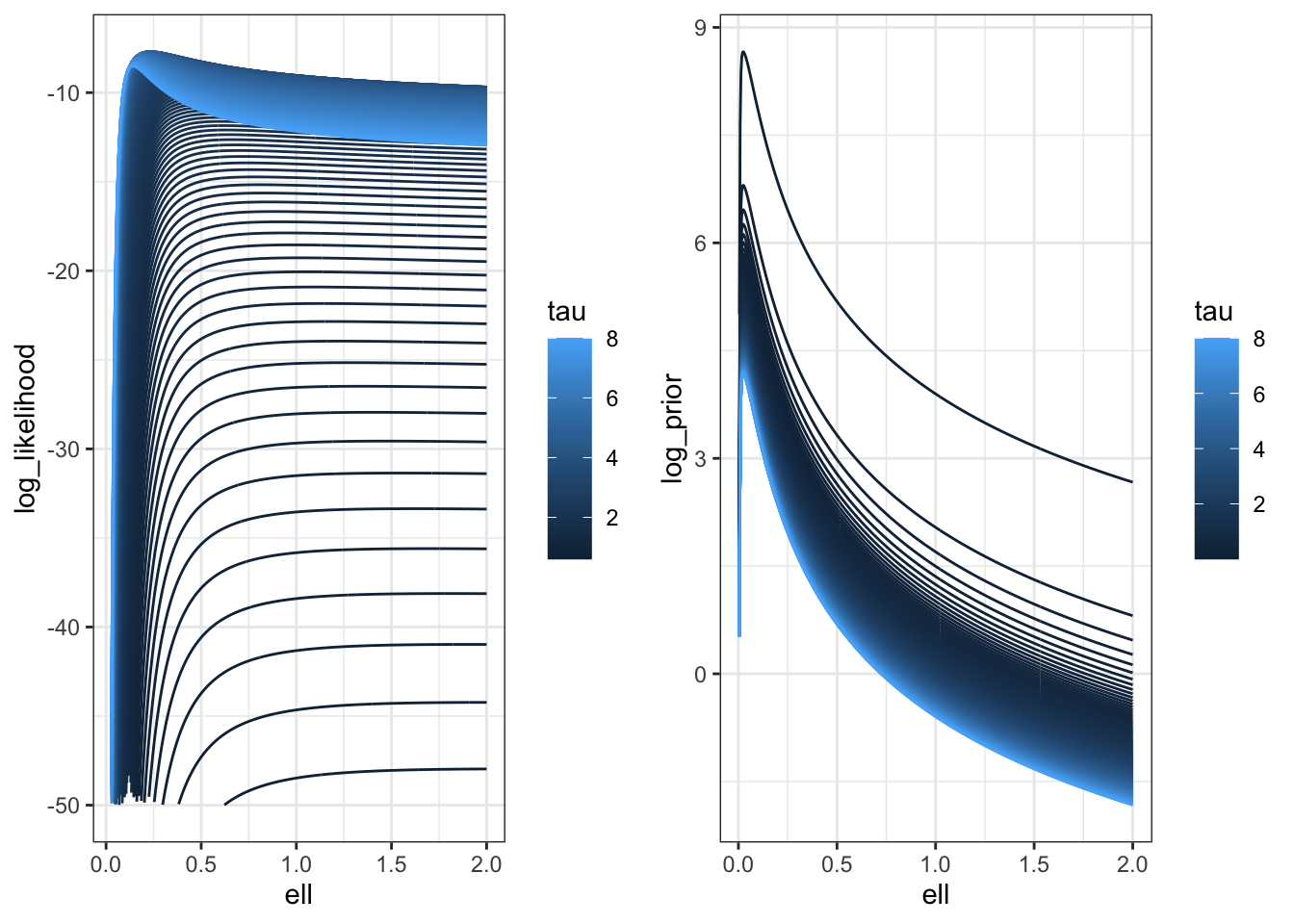

We can compare this with the likelihood surface.

llik <- map_df(seq(0.001, 8, length.out = 200),pars) |>

mutate(tau = c,

log_likelihood = map2_dbl(sigma, ell,

possibly(f_direct, otherwise = NA_real_)))

lprior <- map_df(seq(0.001, 8, length.out = 200),pars) |>

mutate(tau = c,

log_prior = map2_dbl(sigma, ell,

possibly(log_prior, otherwise = NA_real_)))

p1 <- llik |>

filter(log_likelihood > -50) |>

ggplot(aes(ell, log_likelihood, colour = tau, group = tau)) +

geom_line() +

#scale_color_brewer(palette = "Set1") +

theme_bw()

p2 <- lprior |>

filter(log_prior > -20) |>

ggplot(aes(ell, log_prior, colour = tau, group = tau)) +

geom_line() +

#scale_color_brewer(palette = "Set1") +

theme_bw()

plot_grid(p1, p2)

You can see here that the prior is putting a lot of weight at zero relative to the likelihood surface, which is relatively flat.

It’s also important to notice that the ridge isn’t as flat with \(n=25\) as it is with \(n=500\). It would be very interesting to repeat this with larger values of \(n\), but frankly I do not have the time.

Moving beyond the Matérn

There is a lot more to say on this topic. But honestly this blog post is already enormous (you are a bit over halfway if you choose to read the technical guff). So I’m just going to summarise some of the things that I think are important here.

Firstly, the rigorous construction of the PC prior only makes sense when \(d \leq 3\). This is a bit annoying, but it is what it is. I would argue that this construction is still fairly reasonable in moderate dimensions. (In high dimensions I think we need more research.)

There are two ways to see that. Firstly, if you look at the derivation of the distance, it involves an infinite sum that only converges when \(d < 4\). But mathematically, if we can show53 that the partial sums can be bounded independently of \(\ell\), then we can just send another thing to infinity when we send the domain size and the base model length scale there.

A different way is to see this is to note that the PC prior distance is \(d(\ell) = \ell^{-d/2}\). This is proportional to the inverse of the volume of the \(d\)-sphere54 of radius \(\ell\). This doesn’t seem like a massively useful observation, but just wait.

What if we ask ourselves “what is the average variance of \(u(s)\) over a ball of radius \(r\)?”. If we write \(c_{\ell,\sigma}(h)\) as the Matérn covariance function, then55 \[ \operatorname{Var}\left(\frac{1}{\operatorname{Vol}(\mathbb{B}_d(r))}\int_{\mathbb{B}_d(r)}u(s)\,ds\right) = \frac{1}{\operatorname{Vol}(\mathbb{B}_d(r))} \int_0^\infty \tilde{c}_{\ell, \sigma}(t) t^{d-1}\,dt, \] where \(\tilde c_{\ell, \sigma}(t) = c_{\ell, \sigma}(h)\) for all \(\|h\| = t\). If we remember that \(c_{\ell, \sigma}(s) = c_{1, \sigma}(\ell s)\), then we can write this as \[ \frac{1}{\operatorname{Vol}(\mathbb{B}_d(r))} \int_0^\infty \tilde{c}_{1, \sigma}(\ell t) t^{d-1}\,dt = \frac{\ell^{-d}}{\operatorname{Vol}(\mathbb{B}_d(r))} \int_0^\infty \tilde{c}_{1, \sigma}(v) v^{d-1}\,dv. \] Hence the PC prior on \(\ell\) is penalising the change in average standard deviation over a ball relative to the unit length scale. With this interpretation, the base model is, once again, zero standard deviation. This reasoning carries over to the length scale parameter in any56 Gaussian process.

This post only covers the simplest version of Matérn GPs. One simple extension is to construct a non-stationary GP by replacing the Euclidean distance with the distance on a manifold with volume element \(R(s)\,ds\). This might seem like a weird and abstract thing to do, but it’s an intrinsic specification of the popular deformation method due to Guttorp and Samson. Our paper covers the prior specification in this case.

The other common case that I’ve not considered here is the extension where there is a different length scale57 in each dimension. In this case, we could compute a PC prior independently for each dimension (so \(d=1\) for each prior). To be completely honest with you, I worry a little bit about that choice in high dimensions58 (products of independent priors being notoriously weird), but I don’t have a better suggestion.

What’s in the rest of the post?

So you might have noticed that even though the previous section is a “conclusion” section, there is quite a bit more blog to go. I shan’t lie: this whole thing up to this point is a tl;dr that got wildly out of control.

The rest of the post is the details.

There are two parts. The first part covers enough59 of the theory of stationary GPs to allow us to understand the second part, which actually derives the PC prior.

It’s going to get a bit hairy and I’m going to assume you’ve at least skimmed through the first 2 definitions in my previous post defining GPs.

I fully expect that most people will want to stop reading here. But you shouldn’t. Because if I had to suffer you all have to suffer.

Part 2: An invitation to the theory of Stationary Gaussian processes

Gaussian processes with the Matérn covariance function are an excellent example of a stationary60 Gaussian process, which are characterised61 62 by have covariance functions of the form \[ c(s, s') = c(s- s'), \] where I am abusing notation and using \(c\) for both the two parameter and one parameter functions. This assumption means that the correlation structure does not depend on where you are in space, only on the distance between points.

The assumption of stationarity massively simplifies GPs. Firstly, the stationarity assumption greatly reduces the number of parameters you need to describe a GP as we don’t need to worry about location-specific parameters. Secondly, it increases the statistical power of the data. If two subsets of the domain are more than \(2\ell\) apart, they are essentially independent replicates of the GP with the same parameters. This means that if the locations \(s\) vary across a large enough area (relative to the natural length scale), we get multiple effective replicates63 from the same realisation of the process.

In practice, stationarity64 is often a good enough assumption when the mean has been modelled carefully, especially given the limitations of the data. That said, priors on non-stationary processes can be set using the PC prior methodology by using a stationary process as the base model. The supplementary material of our paper gives a simple, but useful, example of this.

Stationary covariance functions and Bochner’s theorem

The restriction to stationary processes is extremely powerful. It opens us up to using Fourier analysis as a potent tool for understanding GPs. We are going to need this to construct our KL divergence, and so with some trepidation, let’s dive into the moonee ponds of spectral representations.

The first thing that we need to do is remember what a Fourier transform is. A Fourier transform of a square integrable function \(\phi(s)\) is65 \[ \hat \phi(\omega) = \mathcal{F}(\phi)(\omega) =\frac{1}{(2\pi)^d}\int_{\mathbb{R}^d} e^{-i\omega^Ts}\phi(s) \,ds. \]

If you have bad memories66 of desperately trying to compute Fourier integrals in undergrad, I promise you that we are not doing that today. We are simply affirming their right to exist (and my right to look them up in a table).

The reason I care about Fourier67 transforms is that if I have a non-negative measure68 \(\nu\), I can define a function \[ c(h) = \int_{\mathbb{R}^d}e^{i\omega^Th}\,d\nu(\omega). \] If measures freak you out, you can—with some loss of generality—assume that there is a function \(f(\omega)\geq 0\) such that \[ c(h) = \int_{\mathbb{R}^d}e^{i\omega^Th}f(\omega)\,d\omega. \] We are going to call \(\nu\) the spectral measure and the corresponding \(f\), if it exists, is called the spectral density.

I put it to you that, defined this way, \(c(s,s') = c(s - s')\) is a (complex) positive definite function.

Recall69 that a function is positive definite if, for every for every \(k>0\), every \(s_1, \ldots, s_k \in \mathbb{R}^d\), and every \(a_1, \ldots, a_k \in \mathbb{C}\) \[ \sum_{i = 1}^k\sum_{j=1}^k a_i\bar{a}_j c(s_i, s_j) \geq 0, \] where \(\bar a\) is the complex conjugate of \(a\).

Using our assumption about \(c(\cdot)\) we can write the left hand side as \[\begin{align*} \sum_{i = 1}^k\sum_{j=1}^k a_i\bar{a}_j c(s_i, s_j) &= \sum_{i = 1}^k\sum_{j=1}^k a_i\bar{a}_j c(s_i- s_j) \\ &=\sum_{i = 1}^k\sum_{j=1}^k a_i\bar{a}_j \int_{\mathbb{R}^d} e^{i\omega^T(s_i-s_j)}\,d\nu(\omega) \\ &=\int_{\mathbb{R}^d}\sum_{i = 1}^k\sum_{j=1}^k a_i\bar{a}_j e^{i\omega^T(s_i-s_j)}\,d\nu(\omega) \\ &=\int_{\mathbb{R}^d}\left(\sum_{i = 1}^k a_i e^{i\omega^Ts_i}\right)\left(\sum_{j = 1}^k \bar{a_j} e^{-i\omega^Ts_j}\right) \,d\nu(\omega)\\ &=\int_{\mathbb{R}^d}\left(\sum_{i = 1}^k a_i e^{i\omega^Ts_i}\right)\overline{\left(\sum_{j = 1}^k a_j e^{i\omega^Ts_j}\right)} \,d\nu(\omega) \\ &=\int_{\mathbb{R}^d}\left|\sum_{i = 1}^k a_i e^{i\omega^Ts_i}\right|^2\,d\nu(\omega) \geq 0, \end{align*}\] where \(|a|^2 = a\bar{a}\).

We have shown that if \(c(s,s') = c(s-s') = \int e^{i\omega^T(s-s')}\,d \nu(\omega)\) , then it is a valid covariance function. This is also true, although much harder to prove, in the other direction and the result is known as Bochner’s theorem.

Theorem 5 (Bochner’s theorem) A function \(c(\cdot)\) is positive definite, ie for every \(k>0\), every \(s_1, \ldots, s_k \in \mathbb{R}^d\), and every \(a_1, \ldots, a_k \in \mathbb{C}\) \[ \sum_{i = 1}^k\sum_{j=1}^k a_i\bar{a}_j c(s_i- s_j) \geq 0, \] if and only if there is a non-negative finite measure \(\nu\) such that \[ c(h) = \int_{\mathbb{R}^d} e^{i\omega^Th}\,d\nu(\omega). \]

Just as a covariance function70 is enough to completely specify a zero-mean Gaussian process, a spectral measure is enough to completely specify a zero mean stationary Gaussian process.

Our lives are mathematically much easier when \(\nu\) represents a density \(f(\omega)\) that satisfies \[ \int_{\mathbb{R}^d}\phi(\omega)\,d\nu(\omega) = \int_{\mathbb{R}^d}\phi(\omega)f(\omega)\,d\omega. \] This function, when it exists, is precisely the Fourier transform of \(c(h)\). Unfortunately, this will not exist71 for all possible positive definite functions. But as we drift further and further down this post, we will begin to assume that we’re only dealing with cases where \(f\) exists.

The case of particular interest to us is the Matérn covariance function. The parameterisation used above is really lovely, but for mathematical convenience, we are going to set72 \(\kappa = \sqrt{8\nu}\ell^{-1}\), which has73 Fourier transform \[\begin{align*} f(\omega) &= \frac{\Gamma(\nu+d/2)\kappa^{2\nu}\sigma^2}{4^{d}\pi^{d/2}\Gamma(\nu)}\frac{1}{(\kappa^2 + \|\omega\|^2)^{\nu+d/2}}\\ &= C_\text{Matérn}(\nu,d).\kappa^{2\nu}\sigma^2 \frac{1}{(\kappa^2 + \|\omega\|^2)^{\nu+d/2}}, \end{align*}\] where \(C_\text{Matérn}(\nu,d)\) is defined implicitly above and is a constant (as we are keeping \(\nu\) fixed).

Spectral representations (and the simplest of the many many versions of a stochastic integral)

To see this, we need a tiny bit of machinery. Specifically, we need the concept of a Gaussian \(\nu\)-noise and its corresponding integral.

Definition 1 (Complex \(\nu\)-noise) A (complex) \(\nu\)-noise74 is a random measure75 \(Z_\nu(\cdot)\) such that, for every76 disjoint77 pair of sets \(A, B\) satisfies the following properties

- \(Z_\nu(A)\) has mean zero and variance \(\nu(A)\),

- If \(A\) and \(B\) are disjoint then \(Z_\nu(A\cup B) = Z_\nu(A) + Z_\nu(B)\)

- If \(A\) and \(B\) are disjoint then \(Z_\nu(A)\) and \(Z_\nu(B)\) are uncorrelated78, ie \(\mathbb{E}(Z_\nu(A) \overline{Z_\nu(B)}) = 0\).

This definition might not seem like much, but imagine a simple79 piecewise constant function \[ f(\omega) = \sum_{i=1}^{n} f_i 1_{A_i}(\omega),\quad g(\omega) = \sum_{i=1}^{n} g_i 1_{A_i}(\omega) \] where \(f_i, g_i\in \mathbb{C}\) and the sets \(A_i\) are pairwise disjoint and \(\bigcup_{i=1}^n A_i = \mathbb{R}^d\). Then we can define an integral with respect to the \(\nu\)-noise as \[ \int_{\mathbb{R}^d} f(\omega)\,dZ_\nu(\omega) = \sum_{i=1}^n f_i Z_\nu(A_i), \] which has mean \(0\) and variance \[ \mathbb{E}\left(\int_{\mathbb{R}^d} f(\omega)\,dZ_\nu(\omega)\right)^2 = \sum_{i=1}^n f_i^2 \nu(A_i) = \int_{\mathbb{R}^d}f(\omega)^2\,d\nu(\omega), \] where the first equality comes from noting that \(\int_{A_i} \,dZ_v(\omega)\) and \(\int_{A_j} \, dZ_v(\omega)\) are uncorrelated and the last equality comes from the definition of an integral of a piecewise constant function.

Moreover, we get the covariance \[\begin{align*} \mathbb{E}\left(\int_{\mathbb{R}^d} f(\omega)\,dZ_\nu(\omega)\overline{\int_{\mathbb{R}^d} g(\omega)\,dZ_\nu(\omega)}\right) &= \sum_{i=1}^n \sum_{j=1}^n f_i g_j \nu(A_i \cap A_j) \\ &= \sum_{i=1}^n f_i\overline{g}_i \nu(A_i) \\ &= \int_{\mathbb{R}^d}f(\omega)\overline{g(\omega)}\,d\nu(\omega). \end{align*}\]

A nice thing is that while these piecewise constant functions are quite simple, we can approximate any80 function arbitrarily well by a simple function. This is the same fact we use to build ourselves ordinary81 integrals.

In particular, the brave and the bold among you might just say “we can take limits here and define” an integral with respect to the \(\nu\)-noise this way. And, indeed, that works. You get that, for any \(f\in L^2(\nu)\),

\[ \mathbb{E}\left(\int_{\mathbb{R}^d} f(\omega)\,d Z_\nu(\omega)\right) = 0 \] and, for any \(f,g \in L^2(\nu)\), \[ \mathbb{E}\left(\int_{\mathbb{R}^d} f(\omega)\,d Z_\nu(\omega)\overline{\int_{\mathbb{R}^d} g(\omega)\,d Z_\nu(\omega)}\right) = \int_{\mathbb{R}^d} f(\omega)\overline{g(\omega)}\,d \nu(\omega). \]

If we define \[ u(s) = \int_{\mathbb{R}^d}e^{i\omega^Ts}\,dZ_\nu(\omega), \] then it follows immediately that \(u(s)\) is mean zero and has covariance function \[ \mathbb{E}(u(s)\overline{u(s')}) = \int_{\mathbb{R}^d}e^{i\omega^T(s - s')}\, d\nu(\omega) = c(s-s'). \] That is \(\nu\) is the spectral measure associated with the correlation function.

Combining this with Bochner’s theorem, we have just proved82 the spectral representation theorem for general83 (weakly) stationary84 random fields85.

Theorem 6 (Spectral representation theorem) If \(\nu\) is a finite, non-negative measure on \(\mathbb{R}^d\) and \(W\) is a complex \(\nu\)-noise, then the complex-valued process \[ u(s) =\int_{\mathbb{R}^d}e^{i\omega^Ts}\,dZ_\nu(\omega) \] has mean zero an covariance \[ c(s,s') = \int_{\mathbb{R}^d}e^{i\omega^T(s-s')}\,d\nu(\omega) \] and is therefore weakly stationary. If \(Z_\nu(A) \sim N(0, \nu(A))\) then \(u(s)\) is a Gaussian process.

Furthermore, every mean-square continuous mean zero stationary Gaussian process with covariance function \(c(s,s')= c(s-s')\) and corresponding spectral measure \(\nu\) has an associated \(\nu\)-noise \(Z_\nu(\cdot)\) such that \[ u(s) =\int_{\mathbb{R}^d}e^{i\omega^Ts}\,dZ_\nu(\omega) \] holds in the mean-square sense for all \(s \in \mathbb{R}^d\).

\(Z_\nu(\cdot)\) is called the spectral process 86 associated with \(u(\cdot)\). When it exists, the density of \(\nu\), denoted by \(f(\omega)\), is called the spectral density or the power spectrum.

All throughout here I used complex numbers and complex Gaussian processes because, believe it or not, it makes things easier. But you will be pleased to know that \(u(\cdot)\) will be real-valued as long as the spectral density \(f(\omega)\) is symmetric around the origin. And it always is.

The Cameron-Martin87 space of a stationary Gaussian process

One particular advantage of stationary processes is that we get a straightforward characterization of the Cameron-Martin space inner product. Recall that the Cameron-Martin space (or reproducing kernel Hilbert space) associated with a Gaussian process is the88 space of all functions of the form \[ h(s) = \sum_{k=1}^K c_k c(s, s_k), \] where \(K\) is finite, \(c_k\) are real, and \(s_k\) are distinct points in \(\mathbb{R}^d\). This is the space that the posterior mean for GP regression lives in.

The inner product associated with this space can be written in terms of the spectral density \(f\) as89 \[ \langle h, h'\rangle = \int_{\mathbb{R}^d} \hat h(\omega) \overline{\hat {h'}(\omega)} \frac{1}{f(\omega)}\,d\omega. \] In particular, for a Matérn Gaussian process, the corresponding norm is \[ \| h\|_{H_u} = C_\text{Matérn}\kappa^{2\nu}\sigma^2 \int_{\mathbb{R}^d}|\hat h(\omega)|^2 (\kappa^2 + \|\omega\|^2)^{\nu+d/2}\,d\omega. \] For those of you familiar with function spaces, this is equivalent to the norm on \(H^{\nu+d/2}(\mathbb{R}^d)\). One way to interpret this is that the set of functions in the Cameron-Martin space for a Matérn GP only depends on \(\nu\), while the norm and inner product (and hence the posterior mean and all that stuff) depend on \(\nu\), \(\kappa\), and \(\sigma\). This observation is going to be important.

Another look at equivalence and singularity

It would’ve been a bit of an odd choice to spend all this time talking about spectral representations and never using them. So in this section, I’m going to cover the reason for the season: singularity or absolute continuity of Gaussian measures.

The Feldman-Hájek theorem quoted is true on quite general sets of functions. However, if we are willing to restrict ourselves to a separable90 Hilbert91 space there is a much more refined version of the theorem that we can use.

Theorem 7 (Feldman-Hájek theorem (Taylor’s92 version)) Two Gaussian measures \(\mu_1\) (mean \(m_1\), covariance operator93 \(C_1\)) and \(\mu_2\) (mean \(m_2\), covariance operator \(C_2\)) on a separable Hilbert space \(X\) are absolutely continuous if and only if

The Cameron-Martin spaces associated with \(\mu_1\) and \(\mu_2\) are the same (considered as sets of functions. They usually will not have the same inner products.),

\(m_1 - m_2\) is in the94 Cameron-Martin space, and

The operator \(T = C_1^{-1/2}C_2C_1^{-1/2} - I\) is a Hilbert-Schmidt operator, that is it has a countable set of eigenvalues \(\delta_k\) and corresponding eigenfunctions \(\phi_k\) that satisfy \(\delta_k > -1\) and \[ \sum_{k=1}^{\infty}\delta_k^2 < \infty. \]

When these three conditions are fulfilled, the Radon-Nikodym derivative is \[ \frac{d\mu_2}{d\mu_1} = \exp\left(-\frac{1}{2}\sum_{k=1}^\infty \left(\frac{\delta_k}{1 + \delta_k}\eta_k^2 - \log(1+\delta_k)\right)\right], \] where \(\eta_k\) is an sequence of N(0,1) random variables95 96 (under \(\mu_1\)).

Otherwise, the two measures are singular.

This version of Feldman-Hájek is considerably more useful than its previous incarnation. The first condition basically says that the posterior means from the two priors will have the same smoothness and is rarely a problem. Typically the second condition is fulfilled in practice (for example, we always set the mean to zero).

The third condition is where all of the action is. This is, roughly speaking, a condition that says that \(C_1\) and \(C_2\) aren’t toooooo different. To understand this, we need to look a little at what the \(\delta_k\) values actually are. It turns out to actually be easier to ask about \(1+ \delta_k\), which are the eigenvalues of \(C_1^{-1/2}C_2 C_1^{-1/2}\). In that case, we are trying to find the orthonormal system of functions \(\phi_k\in X\) such that \[\begin{align*} C_1^{-1/2}C_2 C_1^{-1/2}\phi_k &= (1+\delta_k) \phi_k \\ C^{-1/2}C_2 \psi_k &= (1+\delta_k) C_1^{1/2}\psi_k \\ C_2\psi_k &=(1+\delta_k) C_1\psi_k, \end{align*}\] where \(\psi_k = C_1^{-1/2}\phi_k\).

Hence, we can roughly interpret the \(\delta_k\) as the eigenvalues of \[ C_1^{-1}C_2 - I. \] The Hilbert-Schmidt condition is then requiring that \(C_1^{-1}C_2\) is not infinitely far from the identity mapping.

A particularly nice version of this theorem occurs when \(C_1\) and \(C_2\) have the same eigenvectors. This is a fairly restrictive assumption, but we are going to end up using it later, so it’s worth specialising. In that case, assuming \(C_j\) has eigenvalues \(\lambda_k^{(j)}\) and corresponding \(L^2\)-orthogonal eigenfunctions \(\phi_k(\cdot)\), we can write97 \[ [C_jh](s) = \sum_{k=1}^\infty \lambda_k^{(j)} \langle\phi_k, h\rangle \phi_k(s). \] Using the orthogonality of the eigenfunctions, we can show98 that \[ [C_j^{\beta}h](s)=\sum_{k=1}^\infty (\lambda_k^{(j)})^\beta \langle\phi_k, h\rangle \phi_k(s). \]

With a bit of effort, we can see that \[ (C_1^{-1/2}C_2C_1^{-1/2} - I)h = \sum_{k=1}^\infty \frac{\lambda_k^{(2)} - \lambda_k^{(1)}}{\lambda_k^{(1)}} \langle\phi_k, h\rangle \phi_k \] and so \[ \delta_k = \frac{\lambda_k^{(2)} - \lambda_k^{(1)}}{\lambda_k^{(1)}}. \] From that, we get99 the KL divergence \[\begin{align*} \operatorname{KL}(\mu_1 || \mu_2) &= \mathbb{E}_{\mu_1}\log\left(\frac{d\mu_1}{d\mu_2}\right) \\ &=-\frac{1}{2}\sum_{k=1}^\infty \left(\frac{\delta_k}{1 + \delta_k} - \log(1+\delta_k)\right) \\ &= \frac{1}{2}\sum_{k=1}^\infty \left[\frac{\lambda_k^{(1)}}{\lambda_k^{(2)}} -1+ \log\left(\frac{\lambda_k^{(1)}}{\lambda_k^{(2)}}\right)\right]. \end{align*}\]

Possibly unsurprisingly, this is simply the sum of the one dimensional divergences \[ \sum_{k=1}^\infty\operatorname{KL}(N(0,\lambda_k^{(1)}) || N(0,\lambda_k^{(2)})). \] It’s fun to convince yourself that that \(\sum_{k=1}^\infty \delta_k^2 < \infty\) is sufficient to ensure the sum converges.

A convenient suffient condition for absolute continuity, which turns out to be necessary for Matérn fields

Ok. So I lied. I suggested that we’d use all of that spectral stuff in the last section. And we didn’t! Because I’m dastardly. But this time I promise we will!

It turns out that even with our fancy version of Feldman-Hájek, it can be difficult100 to work out whether two Gaussian processes are singular or equivalent. One of the big challenges is that the eigenvalues and eigenfunctions depend on the domain \(D\) and so we would, in principle, have to check this quite complex condition for every single domain.

Thankfully, there is an easy to parse sufficient condition that we can use that show when two GPs are equivalent on every bounded domain. These conditions are stated in terms of the spectral densities.

Theorem 8 (Sufficent condition for equivalence (Thm 4 of Skorokhod and Yadrenko)) Let \(u_1(\cdot)\) and \(u_2(\cdot)\) be mean-zero Gaussian processes with spectral densities \(f_j(\omega)\), \(j=1,2\). Assume that \(f_1(\omega)\|\omega\|^\alpha\) is bounded away from zero and infinity for some101 \(\alpha>0\) and \[ \int_{\mathbb{R}^d}\left(\frac{f_2(\omega) - f_1(\omega)}{f_1(\omega)}\right)^2\,d\omega < \infty. \] Then the joint distributions of \(\{u_1(s): s \in D\}\) and \(\{u_2(s): s \in D\}\) are equivalent measures for every bounded region \(D\).

The proof of this is pretty nifty. Essentially it constructs the operator \(T+I\) in a sneaky102 way and then bounds its trace on rectangle containing \(D\). That upper bound is finite precisely when the above integral is finite.

Now that we have a relatively simple condition for equivalence, let’s look at Matérn fields. In particular, we will assume \(u_j(\cdot)\), \(j=1,2\) are two Matérn GPs with the same smoothness parameter \(\nu\) and other parameters103 \((\kappa_j, \sigma_j)\). \[ \int_{\mathbb{R}^d}\left(\frac{f_2(\omega) - f_1(\omega)}{f_1(\omega)}\right)^2\,d\omega = \int_{\mathbb{R}^d}\left(\frac{\kappa_2^{2\nu}\sigma_2^2(\kappa_2^2 + \|\omega\|^2)^{-\nu - d/2} }{\kappa_1^{2\nu}\sigma_1^2(\kappa_1^2 + \|\omega\|^2)^{-\nu - d/2}}-1\right)^2\,d\omega. \] We can save ourselves some trouble by considering two cases separately.

Case 1: \(\kappa_1^{2\nu}\sigma_1^2 = \kappa_2^{2\nu}\sigma_2^2\).

In this case, we can make the change to spherical coordinates via the substitution \(r = \|\omega\|\) and, again to save my poor fingers, let’s set \(\alpha = \nu + d/2\). The condition becomes \[ \int_0^\infty\left[\left(\frac{\kappa_1^2 + r^2 }{\kappa_2^2 + r^2}\right)^{\alpha}-1\right]^2r^{d-1}\,dr < \infty. \] To check that this integral is finite, first note that, near \(r=0\), the integrand is104 \(\mathcal{O}({r^{d-1}})\), so there is no problem there. Near \(r = \infty\) (aka the other place bad stuff can happen), the integrand is \[ 2\alpha(\kappa_1^2 - \kappa_2^2)^2 r^{d-5} + \mathcal{O}(r^{d-7}). \] This is integrable for large \(r\) whenever105 \(d \leq 3\). Hence, the two fields are equivalent whenever \(d\leq 3\) and \(\kappa_1^{2\nu}\sigma_1^2 = \kappa_2^{2\nu}\sigma_2^2\). It is harder, but possible to show that the fields are singular when \(d>4\). The case with \(d=4\) is boring and nobody cares.

Case 2: \(\kappa_1^{2\nu}\sigma_1^2 \neq \kappa_2^{2\nu}\sigma_2^2\).

Let’s define \(\sigma_3 = \sigma_2(\kappa_2/\kappa_1)^\nu\). Then it’s clear that \(\kappa_1^{2\nu}\sigma_3^2 = \kappa_2^{2\nu}\sigma_2^2\) and therefore the Matérn field \(u_3\) with parameters \((\kappa_1, \sigma_3, \nu)\) is equivalent to \(u_2(\cdot)\).

We will now show that \(u_1\) and \(u_3\) are singular, which implies that \(u_1\) and \(u_2\) are singular. To do this, we just need to note that, as \(u_1\) and \(u_3\) have the same value of \(\kappa\), \[ u_3(s) = \frac{\sigma_3}{\sigma_1}u_1(s). \] We know, from the previous blog post, that \(u_3\) and \(u_1\) will be singular unless \(\sigma_1 = \sigma_3\), but this only happens when \(\kappa_1^{2\nu}\sigma_1^2 = \kappa_2^{2\nu}\sigma_2^2\), which is not true by assumption.

Hence we have proved the first part of the following Theorem due, in this form, to Zhang106 (2004) and Anderes107 (2010).

Theorem 9 (Thm 2 of Zhang (2004)) Two Gaussian process on \(\mathbb{R}^d\), \(d\leq 3\), with Matérn covariance functions with parameters \((\ell_j, \sigma_j, \nu)\), \(j=1,2\) induce equivalent Gaussian measures if and only if \[ \frac{\sigma_1^2}{\ell_1^{2\nu}} = \frac{\sigma_2^2}{\ell_2^{2\nu}}. \] When \(d > 4\), the measures are always singular (Anderes, 2010).

Part 3: Deriving the PC prior

With all of that in hand, we are finally (finally!) in a position to show that, in 3 or fewer dimensions, the PC prior distance is \(d(\kappa) = \kappa^{d/2}\). After this, we can put everything together! Hooray!

Approximating the Kullback-Leibler divergence for a Matérn random field

Now, you can find a proof of this in the appendix of our JASA paper, but to be honest it’s quite informal. But although you can sneak any old shite into JASA, this is a blog goddammit and a blog has integrity. So let’s do a significantly more rigorous proof of our argument.

To do this, we will need to find the KL divergence between \(u_1\), with parameters \((\kappa, \tau \kappa_1^{-\nu}, \nu)\) and a base model \(u_0\) with parameters \((\kappa_0, \tau \kappa_0^{-\nu}, \nu)\), where \(\kappa_0\) is some fixed, small number and \(\tau >0\) is fixed. We will actually be interested in the behaviour of the KL divergence as \(\kappa_0\) goes to zero. Why? Because \(\kappa_0 = 0\) is our base model.

The specific choice of standard deviation in both models ensures that \(\kappa^{2\nu}\sigma^2 = \kappa_0^{2\nu}\sigma_0^2\) and so the KL divergence is finite.

In order to approximate the KL divergence, we are going to find a basis that simultaneously diagonalises both processes. In the paper, we simply declared that we could do this. And, morally, we can. But as I said a blog aims to a higher standard than mere morality. Here we strive for meaningless rigour.

To that end, we are going to spend a moment thinking about how this can be done in a way that isn’t intrinsically tied to a given domain \(D\). There may well be a lot of different ways to do this, but the most obvious one is to notice that if \(u(\cdot)\) is periodic on the cube \([-L,L]^d\) for some \(L \gg 0\), then it can be considered as a GP on a \(d\)-dimensional torus. If \(L\) is large enough that \(D \subset [-L,L]^d\), then we might be able to focus on our cube and forget all about the specific domain \(D\).

A nice thing about periodic GPs is that we actually know their Karhunen-Loève108 representation. In particular, if \(c_p(\cdot)\) is a stationary covariance function on a torus, then we109 know that it’s eigenfunctions are \[ \phi_k(s) = e^{-\frac{2\pi i}{L} k^Th}, \quad k \in \mathbb{Z}^d \] and its eigenvalues are \[ \lambda_k = \int_{\mathbb{T}^d} e^{-\frac{2\pi i}{L} k^Th} c_p(h)\,dh. \] This gives110 \[ c_p(h) = \left(\frac{2\pi}{L}\right)^d \sum_{k \in \mathbb{Z}^d}\lambda_k e^{-\frac{2\pi i}{L} k^Th}. \]

Now we have some work to do. Firstly, our process is not periodic111 on \(\mathbb{R}^d\). That’s a bit of a barrier. Secondly, even if it were, we don’t actually know what \(\lambda_k\) is going to be. This is probably112 an issue.

So let’s make this sucker periodic. The trick is to note that, at long enough distances, \(u(s)\) and \(u(s')\) are almost uncorrelated. In particular, if \(\|s - s'\| \gg \ell\), then \(\operatorname{Cov}(u(s), u(s')) \approx 0\). This means that if we are interested in \(u(\cdot)\) on a fixed domain \(D\), then we can replace it with \(u_p(s)\) that is a GP where the covariance function \(c_p(\cdot)\) is the periodic extension of \(c(h)\) from \([-L,L]^d\) to \(\mathbb{R}^d\) (aka we just repeat it!).

This repetition won’t be noticed on \(D\) as long as \(L\) is big enough. But we can run into the small113 problem. This procedure can lead to a covariance function \(c_p(\cdot)\) that is not positive definite. Big problem. Huge.

It turns out that one way to fix this is is to use a smooth cutoff function \(\delta(h)\) that is 1 on \([-L,L]^d\) and 0 outside of \([-\gamma,\gamma]^d\), where \(L>0\) is big enough so that \(D \subset [-L, L]^d\) and \(\gamma > L\). We can then build the periodic extension of a stationary covariance function \(c(\cdot)\) as \[ c_p(h) = \sum_{k \in \mathbb{Z}^d}c(x + 2Lk)\delta(x + 2 Lk). \] It’s important114 to note that this is not the same thing as simply repeating the covariance function in a periodic manner. Near the boundaries (but outside of the domain) there will be some reach-around contamination. Bachmayr, Cohen, and Migliorati show that this does not work for general stationary covariance functions, but does work under the additional condition that \(\gamma\) is big enough and there exist some \(s \geq r > d/2\) and \(0 < \underline{C} \leq \overline{C} < \infty\) such that \[ \underline{C}(1 + \|\omega\|^2)^{-s} \leq f(\omega)\leq \overline{C}(1 + \|\omega\|^2)^{-r}. \] This condition obviously holds for the Matérn covariance function and Bachmayr, Graham, Nguyen, and Scheichl115 showed that \(\gamma > A(d, \nu)\ell\) for some explicit function \(A\) that only depends on \(d\) and \(\nu\) is sufficient to make this work.

The nice thing about this procedure is that \(c_p(s-s') = c(s-s')\) as long as \(s, s' \in D\), which means that our inference is going to be identical on our sample as it would be with the non-periodic covariance function! Splendid!

Now that we have made a valid periodic extension (and hence we know what the eigenfunctions are), we need to work out what the corresponding eigenvalues are.

We know that \[ \int_{\mathbb{R}^d} e^{-\frac{\pi i}{L}k^Th}c(h)\,dh = f\left(\frac{\pi}{L}k\right). \] But it is not clear what will happen when we take the Fourier transform of \(c_p(\cdot)\).

Thankfully, the convolution theorem is here to help us and we know that, if \(\theta(s) = 1 - \delta(s)\), then \[ \int_{\mathbb{R}^d} e^{-\frac{\pi i}{L}k^Th}(c(h) - c_p(h))\,dh = (\hat{\theta}*f)\left(\frac{\pi}{L}k\right), \] where \(*\) is the convolution operator.

In the perfect world, \((\hat{\theta}*f)(\omega)\) would be very close to zero, so we can just replace the Fourier transform of \(c_p\) with the Fourier transform of \(c\). And thank god we live in a perfect world.

The specifics here are a bit tedious116, but you can show that \((\hat{\theta}*f)(\omega) \rightarrow 0\) as \(\gamma \rightarrow \infty\). For Matérn fields, Bachmayr etc performed some heroic calculations to show that the difference is exponentially small as \(\gamma \rightarrow \infty\) and that, as long as \(\gamma > A(\nu) \ell\), everything is positive definite and lovely.

So after a bunch of effort and a bit of a literature dive, we have finally got a simultaneous eigenbasis and we can write our KL divergence as \[\begin{align*} \operatorname{KL}(u_1 || u_0) &= \frac{1}{2} \sum_{\omega \in \frac{2\pi}{L}\mathbb{Z}}\left[\frac{f_1(\omega)}{f_0(\omega)} - 1 - \log \left(\frac{f_1(\omega)}{f_0(\omega)}\right)\right] \\ &= \frac{1}{2} \sum_{\omega \in \frac{2\pi}{L}\mathbb{Z}}\left[\frac{(\kappa_0^2 + \|\omega\|^2)^\alpha}{(\kappa^2 + \|\omega\|^2)^\alpha} - 1 - \log \left(\frac{(\kappa_0^2 + \|\omega\|^2)^\alpha}{(\kappa^2 + \|\omega\|^2)^\alpha} \right)\right]. \end{align*}\] We can write this as \[ \operatorname{KL}(u_1 || u_0) =\frac{1}{2} \left(\frac{L \kappa}{2\pi}\right)^d \sum_{\omega \in \frac{2\pi}{L}\mathbb{Z}}\left(\left[\frac{(\kappa_0^2 + \|\omega\|^2)^\alpha}{(\kappa^2 + \|\omega\|^2)^\alpha} - 1 - \log \left(\frac{(\kappa_0^2 + \|\omega\|^2)^\alpha}{(\kappa^2 + \|\omega\|^2)^\alpha} \right)+\mathcal{O}(e^{-C\gamma})\right]\left(\frac{2\pi}{L \kappa}\right)^d\right) , \] for some constant \(C\) that you can actually work out but I really don’t need to. The important thing is that the error is exponentially small in \(\gamma\), which is very large and spiraling rapidly out towards infinity.

Then, noticing that the sum is just a trapezium rule approximation to a \(d\)-dimensional integral, we get, as \(\kappa_0 \rightarrow 0\) (and hence \(L, \gamma\rightarrow \infty\)), \[ \operatorname{KL}(u_1 || u_0) = \frac{1}{2} \left(\frac{L \kappa}{2\pi}\right)^d \int_{\mathbb{R}^d}\left[\frac{((\kappa_0/\kappa)^2 + \|\omega\|^2)^\alpha}{(1 + \|\omega\|^2)^\alpha} - 1 - \log \left(\frac{((\kappa_0/\kappa)^2 + \|\omega\|^2)^\alpha}{(1 + \|\omega\|^2)^\alpha} \right)\right] + \mathcal{O}(1). \] The integral converges whenever \(d \leq 3\).

This suggests that we can re-scale the distance by absorbing the \((L/(2\pi^d))\) into the constant in the PC prior, and get \[ d(\kappa) = \kappa^{d/2}. \]

This distance does not depend on the specific domain \(D\) (or the observation locations), which is an improvement over the PC prior I derived in the introduction. Instead, it only assumes that \(D\) is bounded, which isn’t really a big restriction in practice.

The PC prior for \((\sigma, \ell)\)

With all of this in hand, we can now construct the PC prior. Instead of working directly with \((\sigma, \ell)\), we will instead derive the prior for the estimable parameter \(\tau = \kappa^\nu \sigma\), and the non-estimable parameter \(\kappa\).

We know that \(\tau^2\) multiplies the covariance function of \(u(\cdot)\), so it makes sense to treat \(\tau\) like a standard deviation parameter. In this case, the PC prior is \[ p(\tau \mid \kappa) = \lambda_\tau(\kappa)e^{-\lambda_\tau(\kappa) \tau}. \] The canny among you would have noticed that I have made the scaling parameter \(\tau\) depend on \(\kappa\). I have done this because the quantity of interest that we want our prior to control is the marginal standard deviation \(\sigma = \kappa^\nu \tau\), which is a function of \(\kappa\). If we want to ensure \(\Pr(\sigma < U_\sigma) = \alpha_\sigma\), we need \[ \lambda_\tau(\kappa) = -\kappa^\nu\frac{\log \alpha_\sigma}{U_\sigma}. \]

We can now derive the PC prior for \(\kappa\). The distance that we just spent all that effort calculating, and an exponential prior on \(\kappa^{d/2}\) leads117 to the prior \[ p(\kappa) = \frac{d}{2}\lambda_\ell \kappa^{d/2-1}e^{-\lambda_\ell \kappa^{d/2}}. \] Note that in this case, \(\lambda_\ell\) does not depend on any other parameters: this is because \(\ell = \sqrt{8\nu}\kappa^{-1}\) is our identifiable parameter. If we require \(\Pr(\ell < L_\ell) = \alpha_\ell\), we get \[ \lambda_\ell = -\left(\frac{L_\ell}{\sqrt{8\nu}}\right)^{d/2} \log \alpha_\ell. \]

Hence the joint PC prior on \((\kappa, \tau)\), which is emphatically not the product of two independent priors, is \[ p(\kappa, \tau) = \frac{d}{2U_\sigma}\log (\alpha_\ell)\log(\alpha_\sigma)\left(\frac{L_\ell}{\sqrt{8\nu}}\right)^{d/2} \kappa^{\nu + d/2-1}\exp\left[-\left(\frac{L_\ell}{\sqrt{8\nu}}\right)^{d/2}| \log (\alpha_\ell)| \kappa^{d/2} -\frac{|\log \alpha_\sigma|}{U_\sigma} \tau\kappa^\nu\right]. \]

Great gowns, beautiful gowns.